AI Cache Control for Security

Securing Semantic Caching and AI Memory in Retrieval-Augmented Generation (RAG) with Secure Enclave Technology

Retrieval-Augmented Generation (RAG) is an architectural pattern in modern AI applications that grounds large language models (LLMs) in relevant, factual, and up-to-date information. RAG combines the parametric knowledge of LLMs with non-parametric knowledge from external data sources, enabling more accurate and context-aware responses to user queries. The pattern has been widely adopted because it improves the relevance and accuracy of generative AI output.

However, to maximize the performance and efficiency of RAG-based AI applications, two critical functionalities need to be addressed before moving to production: semantic caching and AI memory.

These capabilities reduce response time and infrastructure costs, and they let the application reuse information from previous conversations instead of recomputing it.

In this post, we’ll explore why semantic caching and AI memory in RAG workflows need to be secured, how they improve the efficiency of LLMs, and how Secure Enclave technology protects them from potential vulnerabilities.

The Role of Retrieval-Augmented Generation (RAG) in Modern AI

Retrieval-Augmented Generation (RAG) enhances LLMs by combining internal knowledge with external, real-time data, grounding responses with factual, up-to-date information.

Key Contribution of RAG: By supplementing the parametric knowledge embedded in LLMs with non-parametric knowledge, RAG ensures that generated responses are more accurate and contextually relevant.

Applications of RAG: RAG is widely adopted in question-answering, content creation, conversational agents, and recommendation systems, where relevance and grounding are critical.

While RAG enhances AI’s response capabilities, semantic caching and AI memory offer additional benefits that extend the usefulness of modern AI applications. These features are essential for improving response latency, reducing infrastructure costs, and optimizing storage.

The Importance of Semantic Caching in RAG Workflows

Semantic caching stores queries and their results keyed by contextual meaning rather than by exact match. Traditional caching returns a stored result only when a request is identical to an earlier one; semantic caching captures the semantics of a query, that is, its meaning and the relationships within it, so that differently worded but equivalent queries can share a result.

How Semantic Caching Works

In a RAG-based AI system, user queries that are exact matches or contextually similar to previously cached queries benefit from a more efficient information retrieval process. Instead of recalculating responses for every query, the system can retrieve results from the semantic cache, improving response time and reducing the need for redundant computations.
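The lookup described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: `SemanticCache`, `toy_embed`, and the `0.95` threshold are hypothetical names and values chosen for the example, and the letter-count "embedding" is only a stand-in for a real embedding model.

```python
import math
from typing import Callable, List, Optional, Tuple

Vector = List[float]


def cosine_similarity(a: Vector, b: Vector) -> float:
    """Cosine of the angle between two vectors; 0.0 if either is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0.0 or norm_b == 0.0:
        return 0.0
    return dot / (norm_a * norm_b)


def toy_embed(text: str) -> Vector:
    """Stand-in embedding (assumption): counts of the letters a-z."""
    counts = [0.0] * 26
    for ch in text.lower():
        if "a" <= ch <= "z":
            counts[ord(ch) - ord("a")] += 1.0
    return counts


class SemanticCache:
    """Returns a cached response when a new query is similar enough to an old one."""

    def __init__(self, embed: Callable[[str], Vector], threshold: float = 0.9):
        self.embed = embed
        self.threshold = threshold
        self.entries: List[Tuple[Vector, str]] = []

    def put(self, query: str, response: str) -> None:
        self.entries.append((self.embed(query), response))

    def get(self, query: str) -> Optional[str]:
        q = self.embed(query)
        best_score, best_response = 0.0, None
        # Linear scan for clarity; a real system would use a vector index.
        for vec, response in self.entries:
            score = cosine_similarity(q, vec)
            if score > best_score:
                best_score, best_response = score, response
        return best_response if best_score >= self.threshold else None


cache = SemanticCache(embed=toy_embed, threshold=0.95)
cache.put("What is RAG?", "RAG grounds an LLM with retrieved documents.")
hit = cache.get("what is rag")  # same letters, so the cached answer is reused
miss = cache.get("zzz")         # no overlap, so the LLM would have to be called
```

The threshold is the main design choice: set it too low and users receive answers to questions they did not ask, which is also how one user's cached content could be served to another if lookups are not scoped by access rights.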

Benefits of Semantic Caching

Faster response times: By reusing cached content based on contextual similarities, semantic caching reduces the time it takes to generate responses.

Reduced infrastructure costs: Because cached results are reused, fewer requests reach the LLM and the retrieval pipeline, which lowers the demand on processing power.

Improved user experience: Quicker, more contextually relevant responses enhance user satisfaction and engagement.

Security Challenges in Semantic Caching

While semantic caching delivers performance and cost benefits, it introduces data security risks, particularly in enterprise AI applications. Cached data may contain sensitive business information, intellectual property (IP), or personally identifiable information (PII). If not properly secured, this cache can become a leakage point for sensitive data.

Common vulnerabilities include:

Data retention risks: Cached data may persist longer than necessary, increasing the likelihood of exposure.

Insufficient access control: Traditional Role-Based Access Control (RBAC) models often fail to provide granular enough control, leaving caches vulnerable to unauthorized access.

Data leakage: Without proper encryption, cached data may be read or exfiltrated, exposing sensitive information.

How Secure Enclaves Protect Semantic Caching

Secure Enclave technology provides a solution to these security challenges by creating an isolated, hardware-protected environment where sensitive data is encrypted and processed. Here’s how Secure Enclaves address the security risks in semantic caching:

- Isolated Execution: Secure Enclaves isolate cached data and embeddings from the broader system, ensuring that even if other parts of the infrastructure are compromised, the cached content remains protected.

- Granular Access Control with Just-in-Time Policies: Secure Enclaves integrate with access control frameworks like Open Policy Agent (OPA) and WAVE to enforce just-in-time access for cached data. This means that access is granted on a per-request basis, based on the context of the query and user role, minimizing the risk of unauthorized access.

- End-to-End Encryption: All cached data, whether it’s embeddings, metadata, or query responses, remains encrypted both at rest and in use, preventing unauthorized access.

- Automated Data Lifecycle Management: Secure Enclaves enable organizations to define strict retention policies, ensuring that cached content is automatically purged when it’s no longer needed. This reduces the window of vulnerability and ensures compliance with data protection regulations.
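The retention point in the list above can be illustrated with a cache whose entries carry an expiry time and are purged once it passes. This is a sketch under stated assumptions: `ExpiringCache` and its methods are hypothetical names, and enclave isolation and encryption are outside what a few lines of Python can show.

```python
import time
from typing import Callable, Dict, Optional, Tuple


class ExpiringCache:
    """Cache whose entries are dropped once their retention period has elapsed."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl_seconds = ttl_seconds
        self.clock = clock  # injectable so retention can be tested without sleeping
        self._store: Dict[str, Tuple[float, str]] = {}

    def put(self, key: str, value: str) -> None:
        self._store[key] = (self.clock() + self.ttl_seconds, value)

    def get(self, key: str) -> Optional[str]:
        entry = self._store.get(key)
        if entry is None:
            return None
        expires_at, value = entry
        if self.clock() >= expires_at:
            # Expired entries are never served, even if no purge has run yet.
            del self._store[key]
            return None
        return value

    def purge_expired(self) -> int:
        """Delete every expired entry and return how many were removed."""
        now = self.clock()
        expired = [k for k, (expires_at, _) in self._store.items() if now >= expires_at]
        for k in expired:
            del self._store[k]
        return len(expired)
```

Checking expiry on read as well as in a periodic `purge_expired` call means stale content is not returned between purge runs.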

AI Memory: Extending the Utility of Generative AI

In addition to semantic caching, AI memory allows modern AI systems to retain knowledge across interactions. AI memory systems store historical interactions or session information to provide more contextually aware and personalized responses. This is crucial for applications that require ongoing conversations or need to build upon previous interactions.

Security risks in AI memory systems are similar to those in semantic caching, as sensitive data from previous sessions could be stored and accessed later. Secure Enclaves ensure that AI memory systems are equally protected by isolating and encrypting stored session data.
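As a rough illustration of session memory, the sketch below keeps a bounded history per session and keeps sessions separate from one another. `SessionMemory` and `max_turns` are hypothetical names for this example; the enclave-based isolation and encryption described above are not modeled here.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List, Tuple

Turn = Tuple[str, str]  # (role, text)


class SessionMemory:
    """Keeps the most recent turns of each conversation, one history per session."""

    def __init__(self, max_turns: int = 20):
        self.max_turns = max_turns
        # deque(maxlen=...) silently drops the oldest turn once the limit is reached.
        self._sessions: Dict[str, Deque[Turn]] = defaultdict(
            lambda: deque(maxlen=max_turns)
        )

    def remember(self, session_id: str, role: str, text: str) -> None:
        self._sessions[session_id].append((role, text))

    def recall(self, session_id: str) -> List[Turn]:
        # .get() avoids creating an empty history for an unknown session.
        turns = self._sessions.get(session_id)
        return list(turns) if turns is not None else []

    def forget(self, session_id: str) -> None:
        """Delete a session's history, for example when the conversation ends."""
        self._sessions.pop(session_id, None)
```

Bounding the history and offering an explicit `forget` limit how much sensitive session data is held at any time.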

Beyond RBAC: Dynamic Access Control with OPA and WAVE

Traditional RBAC models are not sufficient to protect the sensitive data stored in semantic caches. Modern AI applications require dynamic access control that takes into account the context of each interaction. By integrating Secure Enclaves with Open Policy Agent (OPA) and WAVE, organizations can enforce just-in-time access policies that evaluate requests in real-time and provide the necessary permissions for the specific query at hand. This ensures that cached data is only accessible when it is absolutely necessary, and only to those who are authorized to see it.
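To make the idea of a per-request decision concrete, here is a small Python stand-in for a policy check. It is not OPA's or WAVE's API; in an OPA deployment the rule would be written as policy and evaluated by the policy engine. The names `AccessRequest`, `CacheEntryLabel`, and `is_allowed`, and the tenant, role, and purpose attributes, are assumptions made for the example.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class AccessRequest:
    """Context evaluated for a single cache lookup (hypothetical fields)."""
    user_role: str
    tenant: str
    purpose: str


@dataclass(frozen=True)
class CacheEntryLabel:
    """Access metadata stored alongside a cached entry (hypothetical fields)."""
    tenant: str
    allowed_roles: FrozenSet[str]
    allowed_purposes: FrozenSet[str]


def is_allowed(request: AccessRequest, label: CacheEntryLabel) -> bool:
    """Deny by default; allow only if every attribute of this request matches."""
    return (
        request.tenant == label.tenant
        and request.user_role in label.allowed_roles
        and request.purpose in label.allowed_purposes
    )


label = CacheEntryLabel(
    tenant="acme",
    allowed_roles=frozenset({"analyst"}),
    allowed_purposes=frozenset({"support"}),
)
ok = is_allowed(AccessRequest("analyst", "acme", "support"), label)
denied = is_allowed(AccessRequest("analyst", "acme", "marketing"), label)
```

The difference from plain RBAC is that the role alone is not enough: the same analyst is refused when the purpose or tenant of the request does not match the entry.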

Conclusion: Secure Caching is Key to AI Success

As enterprises adopt RAG workflows to enhance the capabilities of LLMs, securing the semantic cache and AI memory becomes a top priority. Without proper security measures, cached data can expose sensitive information, creating risks around compliance, IP theft, and data breaches. By leveraging Secure Enclave technology, organizations can ensure that semantic caches remain protected through isolation, granular access control, and encryption. Integrating access control frameworks like OPA and WAVE further strengthens the security posture by ensuring dynamic, just-in-time access to cached data.

In the evolving landscape of AI, securing semantic caching and AI memory in RAG workflows is essential for balancing performance with security, enabling enterprises to harness the full potential of Generative AI without compromising on data protection.

Download the latest checklist from Gartner on How to Build GenAI Applications Securely now.

Experience Secure Collaborative Data Sharing Today.

Learn more about how SafeLiShare works

Suggested for you

February 21, 2024

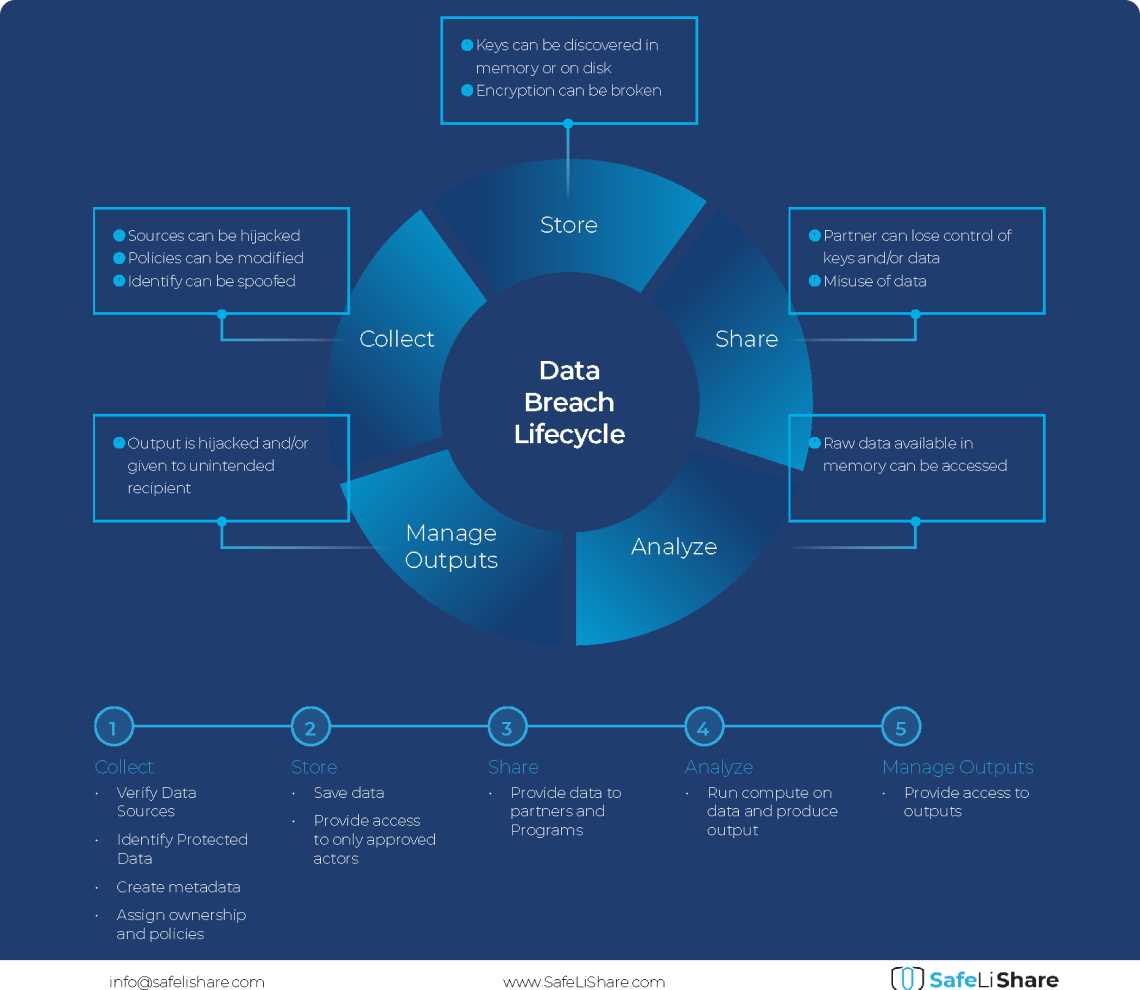

Cloud Data Breach Lifecycle Explained

During the data life cycle, sensitive information may be exposed to vulnerabilities in transfer, storage, and processing activities.

February 21, 2024

Bring Compute to Data

Predicting cloud data egress costs can be a daunting task, often leading to unexpected expenses post-collaboration and inference.

February 21, 2024

Zero Trust and LLM: Better Together

Cloud analytics inference and Zero Trust security principles are synergistic components that significantly enhance data-driven decision-making and cybersecurity resilience.