Real-Time Data Monitoring and Compliance

Securing AI Workflows with Secure Enclaves

As AI becomes more integrated into enterprise systems, so too do the risks associated with improper access, data leakage, and malicious actors targeting sensitive assets. The complexity of AI-enabled workflows introduces a wide range of vulnerabilities, particularly when it comes to securing access to valuable data, models, and sensitive consumer information. One solution gaining traction for mitigating these risks is Secure Enclave technology, which provides a Trusted Execution Environment (TEE) that ensures all computations and data within it are isolated, secure, and verifiable.

This post explores how Secure Enclaves as a Service (SEaaS) can generate tamper-proof logs, detect anomalous AI behaviors, and enforce strict access control policies in enterprise AI environments. We’ll also discuss how these capabilities can help businesses comply with emerging AI regulations, such as those designed to protect Americans from AI-enabled fraud, as well as with other data privacy mandates.

Secure Enclaves: The Foundation for AI Security

Secure Enclaves provide a trusted execution environment (TEE) that allows sensitive data and models to be processed in isolation from the rest of the system. These enclaves can ensure that only authorized and authenticated workflows—whether they be AI models, applications, or agents—can enter the enclave and perform run-time computations.

Key Features of Secure Enclaves:

- Isolated Execution: Enclaves shield computations from the rest of the system, so other software on the system cannot tamper with them.

- Run-Time Integrity: Secure Enclaves ensure that only authorized code runs and only authorized data is processed, making the environment tamper-proof.

- Cryptographic Verification: Any changes to the enclave or unauthorized access attempts can be flagged and blocked, ensuring the integrity of the execution environment.
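To make "only authorized code" concrete, here is a minimal sketch of measurement-based admission, assuming a workload is admitted only if the SHA-256 hash of its code matches a pre-approved value. In real TEEs the measurement is computed by hardware and checked through attestation; the names `APPROVED_MEASUREMENTS`, `approve`, and `admit` are hypothetical and only mimic the comparison step.

```python
import hashlib

# Hypothetical allowlist of approved workload measurements (hex SHA-256 digests).
# Real enclaves derive measurements in hardware; this only mimics the check.
APPROVED_MEASUREMENTS: set[str] = set()


def measure(artifact: bytes) -> str:
    """Return a hex SHA-256 digest standing in for an enclave measurement."""
    return hashlib.sha256(artifact).hexdigest()


def approve(artifact: bytes) -> None:
    """Register a reviewed workload so that its measurement is trusted."""
    APPROVED_MEASUREMENTS.add(measure(artifact))


def admit(artifact: bytes) -> bool:
    """Admit a workload only if its measurement matches an approved one."""
    return measure(artifact) in APPROVED_MEASUREMENTS


if __name__ == "__main__":
    model_code = b"def predict(x): return x * 2\n"
    approve(model_code)
    print(admit(model_code))                      # True
    print(admit(model_code + b"exfiltrate()\n"))  # False: any change alters the hash
```

Because any modification to the code changes its digest, a tampered workload fails the check even if it claims the same name.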

This creates a zero-trust environment in which all operations must be verified before proceeding, thereby mitigating many common AI vulnerabilities.

Tamper-Proof Logs and AI Vulnerability Reporting

One of the key capabilities of Secure Enclaves is their ability to generate tamper-proof logs that provide a comprehensive, unalterable record of all operations performed within the enclave. These logs are crucial for auditability, as they ensure that every interaction—whether it’s an AI model requesting data access, a user running a prompt, or an agent performing computations—is fully traceable.
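One common way to make a log tamper-evident is a hash chain, in which each entry commits to the hash of the entry before it. The sketch below assumes that technique; the post does not say how SEaaS logs are built, and `HashChainedLog` and its genesis value are hypothetical. A chain alone does not stop an attacker who rewrites the entire log, so we assume the enclave would also sign or externally anchor the latest hash.

```python
import hashlib
import json
from dataclasses import dataclass, field


@dataclass
class HashChainedLog:
    """Append-only log in which each entry commits to the previous entry's hash.

    Editing an earlier entry changes its recomputed hash and breaks the chain,
    so verify() reports the tampering.
    """

    entries: list[dict] = field(default_factory=list)
    GENESIS = "0" * 64  # hypothetical starting value for the chain (class attribute)

    @staticmethod
    def _digest(prev: str, event: dict) -> str:
        body = json.dumps({"prev": prev, "event": event}, sort_keys=True)
        return hashlib.sha256(body.encode()).hexdigest()

    def append(self, event: dict) -> str:
        prev = self.entries[-1]["hash"] if self.entries else self.GENESIS
        digest = self._digest(prev, event)
        self.entries.append({"prev": prev, "event": event, "hash": digest})
        return digest

    def verify(self) -> bool:
        prev = self.GENESIS
        for entry in self.entries:
            if entry["prev"] != prev or self._digest(prev, entry["event"]) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


if __name__ == "__main__":
    log = HashChainedLog()
    log.append({"actor": "model-a", "action": "read", "resource": "customers"})
    log.append({"actor": "user-42", "action": "prompt"})
    print(log.verify())  # True
    log.entries[0]["event"]["action"] = "delete"
    print(log.verify())  # False
```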

AI Vulnerability and Anomaly Detection

When Secure Enclaves detect anomalous behaviors, such as attempts to access assets for which an application, user, model, or agent lacks privileges, those actions are logged in real time. Anomalous behaviors might include:

- Multiple failed access attempts to restricted data or resources.

- Large volumes of prompt requests that exceed the usual operational parameters, possibly indicating a denial-of-service or exfiltration attempt.

- Unauthorized attempts to use models or run computations outside defined policies.
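The first two behaviors can be caught with simple sliding-window counters per principal. This is a minimal sketch under stated assumptions: the thresholds, the 60-second window, and the `AnomalyDetector` class are hypothetical, and timestamps are assumed to arrive in non-decreasing order.

```python
from collections import defaultdict, deque
from typing import Optional

# Hypothetical thresholds; real values would come from observed baselines.
MAX_FAILED_ATTEMPTS = 3  # failed accesses tolerated per window
MAX_PROMPTS = 100        # prompts tolerated per window
WINDOW_SECONDS = 60.0


class AnomalyDetector:
    """Flags principals whose recent activity exceeds fixed rate thresholds."""

    def __init__(self) -> None:
        # principal -> timestamps of that principal's recent events, oldest first
        self.failures: dict[str, deque] = defaultdict(deque)
        self.prompts: dict[str, deque] = defaultdict(deque)

    @staticmethod
    def _count_in_window(window: deque, now: float) -> int:
        """Record an event at `now`, evict events older than the window, return the count."""
        window.append(now)
        while now - window[0] > WINDOW_SECONDS:
            window.popleft()
        return len(window)

    def failed_access(self, principal: str, now: float) -> Optional[str]:
        count = self._count_in_window(self.failures[principal], now)
        if count > MAX_FAILED_ATTEMPTS:
            return f"{principal}: {count} failed access attempts in {WINDOW_SECONDS:.0f}s"
        return None

    def prompt(self, principal: str, now: float) -> Optional[str]:
        count = self._count_in_window(self.prompts[principal], now)
        if count > MAX_PROMPTS:
            return f"{principal}: {count} prompts in {WINDOW_SECONDS:.0f}s"
        return None


if __name__ == "__main__":
    detector = AnomalyDetector()
    for t in (0, 5, 10, 15):
        alert = detector.failed_access("agent-7", t)
    print(alert)  # agent-7: 4 failed access attempts in 60s
```

A returned alert string is where a real deployment would hook in the alerting and automated responses described next.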

These logs not only record such events but can also be configured to trigger real-time alerts and automated responses. By flagging potential threats early, enclaves act as an early warning system for security teams, providing immediate insight into AI vulnerabilities or attacks in progress.

Reporting AI Vulnerabilities

Incorporating Secure Enclaves throughout the entire enterprise—or even across organizations in cross-enterprise collaborations—helps to construct a comprehensive AI behavior mesh. This mesh provides visibility into how AI models and applications interact with sensitive data and resources. For example:

- Unusual access patterns or spikes in AI activity can indicate attempts to breach security boundaries.

- Repeated model requests for large datasets may suggest that a user or agent is attempting to gather sensitive information for illicit purposes.
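Spotting "spikes in AI activity" requires comparing current behavior against a baseline. As an illustration, the sketch below uses a z-score against a principal's own history. This is a simple statistical technique chosen for the example; the post does not specify how the behavior mesh scores activity, and the threshold of 3 standard deviations is an assumption.

```python
from statistics import mean, pstdev


def is_spike(history: list[float], current: float, z_threshold: float = 3.0) -> bool:
    """Return True if `current` sits more than z_threshold std devs above the history mean."""
    if len(history) < 2:
        return False  # not enough baseline data to judge
    mu = mean(history)
    sigma = pstdev(history)
    if sigma == 0:
        return current > mu  # perfectly flat baseline: any increase stands out
    return (current - mu) / sigma > z_threshold


if __name__ == "__main__":
    hourly_requests = [40, 42, 38, 41, 39]  # hypothetical per-agent request counts
    print(is_spike(hourly_requests, 43))    # False
    print(is_spike(hourly_requests, 400))   # True
```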

With this behavior mesh in place, red teaming efforts (simulated attacks to identify weaknesses) become more effective and efficient. The mesh provides a real-time, accurate view of how AI applications behave under both normal and abnormal conditions, allowing teams to rapidly identify and address vulnerabilities.

Compliance with AI Regulations: Protecting Against AI-Enabled Fraud

Recent AI regulation aimed at protecting Americans from AI-enabled fraud introduces a new layer of complexity for businesses that develop and use AI. Compliance will require AI developers to carefully protect personally identifiable information (PII) and sensitive consumer data.

Secure Enclaves are particularly well-suited to this task, as they enforce strict authentication and authorization protocols for all data and computation requests. This means that only verified users and applications can interact with sensitive data, minimizing the risk of unauthorized access or data leakage.
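The authorization half of this model can be expressed as a deny-by-default check: a request proceeds only if the caller is authenticated and holds an explicit grant for that action on that resource. The sketch below is a hypothetical illustration; the `Request` fields, the `POLICY` table, and the principal names are assumptions, and authentication is assumed to have happened upstream.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    principal: str       # user, application, model, or agent identity
    authenticated: bool  # result of an upstream identity check (assumed)
    action: str          # e.g. "read", "compute"
    resource: str        # e.g. "customer_pii"


# Hypothetical policy: principal -> set of (action, resource) grants.
POLICY: dict[str, set[tuple[str, str]]] = {
    "fraud-model-v2": {("compute", "customer_pii")},
    "analyst-ui": {("read", "aggregate_stats")},
}


def authorize(req: Request) -> bool:
    """Deny by default: allow only authenticated principals with an explicit grant."""
    if not req.authenticated:
        return False
    return (req.action, req.resource) in POLICY.get(req.principal, set())


if __name__ == "__main__":
    print(authorize(Request("fraud-model-v2", True, "compute", "customer_pii")))  # True
    print(authorize(Request("fraud-model-v2", True, "read", "customer_pii")))     # False
```

Anything not explicitly granted, including unknown principals, is refused, which matches the zero-trust stance described earlier.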

Data Privacy Mandates and PII Protection

Other data privacy mandates, such as the General Data Protection Regulation (GDPR), already require businesses to disclose and hand over a person's PII when that person requests it. Unfortunately, cybercriminals have often abused these mandates by spoofing legitimate data requests to gain access to sensitive information.

Secure Enclaves address these risks by:

- Verifying the identity of all entities that attempt to access PII, preventing spoofing and unauthorized disclosures.

- Logging all access attempts in a tamper-proof audit trail that can be used to prove compliance and detect fraudulent behavior.

- Encrypting data both at rest and during processing, ensuring that even if data is intercepted, it cannot be read or manipulated.
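To illustrate the first point, the sketch below rejects a spoofed data request by checking a message authentication code. It assumes each verified requester was provisioned a secret key after an identity check; the key table and function names are hypothetical. HMAC from the Python standard library is used only to keep the example self-contained, and a production system would more likely rely on asymmetric signatures or an identity provider. The sketch does not cover replay protection, logging, or encryption.

```python
import hashlib
import hmac
import json

# Hypothetical keys provisioned to verified requesters and held inside the enclave.
# Never hard-code real keys; this is for illustration only.
REQUESTER_KEYS = {"requester-123": b"demo-shared-secret"}


def sign(payload: dict, key: bytes) -> str:
    """Compute an HMAC-SHA256 tag over a canonical JSON encoding of the payload."""
    message = json.dumps(payload, sort_keys=True).encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify_request(requester_id: str, payload: dict, signature: str) -> bool:
    """Accept a PII request only if it carries a valid tag from a known requester."""
    key = REQUESTER_KEYS.get(requester_id)
    if key is None:
        return False  # unknown requester: reject outright
    return hmac.compare_digest(sign(payload, key), signature)  # constant-time compare


if __name__ == "__main__":
    request = {"subject": "user-42", "type": "access"}
    genuine = sign(request, REQUESTER_KEYS["requester-123"])
    print(verify_request("requester-123", request, genuine))  # True
    forged = sign(request, b"attacker-guess")
    print(verify_request("requester-123", request, forged))   # False
```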

By ensuring that only authorized workflows can access data, Secure Enclaves help businesses meet their compliance obligations without exposing themselves to unnecessary risks.

Tamper-Proof Audits and Cloud Monitoring

Once tamper-proof logs are generated within a Secure Enclave, they can be integrated with cloud monitoring platforms such as Datadog, or with Cloud Security Posture Management (CSPM) and Cloud-Native Application Protection Platform (CNAPP) tools. These platforms offer comprehensive visibility into the health and security of cloud environments, enabling continuous monitoring of AI workflows.

By feeding Secure Enclave logs into these cloud monitoring solutions, organizations gain a holistic view of how their AI systems operate across the enterprise. Additionally, automated policy enforcement ensures that any unauthorized actions are immediately flagged and handled, further reducing the risk of data breaches.
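Many log collectors can ingest newline-delimited JSON, so one plausible integration step is to flatten each enclave log entry into a single JSON line. The sketch below shows only that formatting step; the field names and the `to_json_line` helper are hypothetical, and actually shipping the lines to Datadog or a CSPM/CNAPP tool would go through that vendor's own agent or API, which is not shown.

```python
import json
from datetime import datetime, timezone
from typing import Any


def to_json_line(entry: dict[str, Any], source: str = "secure-enclave") -> str:
    """Flatten one enclave log entry into a single JSON line for a log shipper."""
    record = {
        "timestamp": datetime.fromtimestamp(entry["ts"], tz=timezone.utc).isoformat(),
        "source": source,
        "principal": entry["principal"],
        "action": entry["action"],
        "decision": entry["decision"],
        "chain_hash": entry.get("hash"),  # hash-chain link, if the log keeps one
    }
    return json.dumps(record, sort_keys=True)


if __name__ == "__main__":
    entry = {"ts": 0, "principal": "agent-7", "action": "read",
             "decision": "deny", "hash": "ab12"}
    print(to_json_line(entry))
```

Carrying the chain hash alongside each record would let a downstream tool spot gaps or edits, although verifying the chain still has to happen somewhere that trusts the enclave's output.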

Reducing AI Risks with Secure Enclaves Across the Enterprise

The deployment of Secure Enclaves throughout the enterprise, and even across multiple organizations, constructs an AI behavior mesh that allows security teams to monitor and analyze AI model behavior in real time. This visibility helps mitigate risks such as:

- Unauthorized model access: Only authenticated models and users can access the data and perform computations.

- Data exfiltration: Attempts to misuse AI systems to exfiltrate data can be detected and prevented.

- Anomalous prompts: Secure Enclaves detect unusual prompt requests, such as a high volume of queries that could indicate an attempt to flood the system or steal sensitive information.
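Prevention, as opposed to detection, means a request can be refused before data leaves the enclave. One simple form is a per-principal data-egress budget, sketched below. The `EgressBudget` class and the row limit are hypothetical, and resetting the budget (for example daily) is left to the caller.

```python
from collections import defaultdict


class EgressBudget:
    """Refuses requests once a principal's cumulative rows would exceed a budget."""

    def __init__(self, max_rows: int) -> None:
        self.max_rows = max_rows
        self.used: dict[str, int] = defaultdict(int)  # principal -> rows already released

    def allow(self, principal: str, rows_requested: int) -> bool:
        if self.used[principal] + rows_requested > self.max_rows:
            return False  # would exceed the budget: deny and leave usage unchanged
        self.used[principal] += rows_requested
        return True


if __name__ == "__main__":
    budget = EgressBudget(max_rows=1000)
    print(budget.allow("agent-7", 600))  # True
    print(budget.allow("agent-7", 600))  # False: 1200 rows would exceed 1000
    print(budget.allow("agent-7", 400))  # True: exactly reaches the budget
```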

Secure Enclaves, with their real-time policy enforcement and anomaly detection, enhance the overall security posture of AI applications, while their integration with cloud monitoring solutions ensures that organizations can respond swiftly to emerging threats.


Suggested for you

February 21, 2024

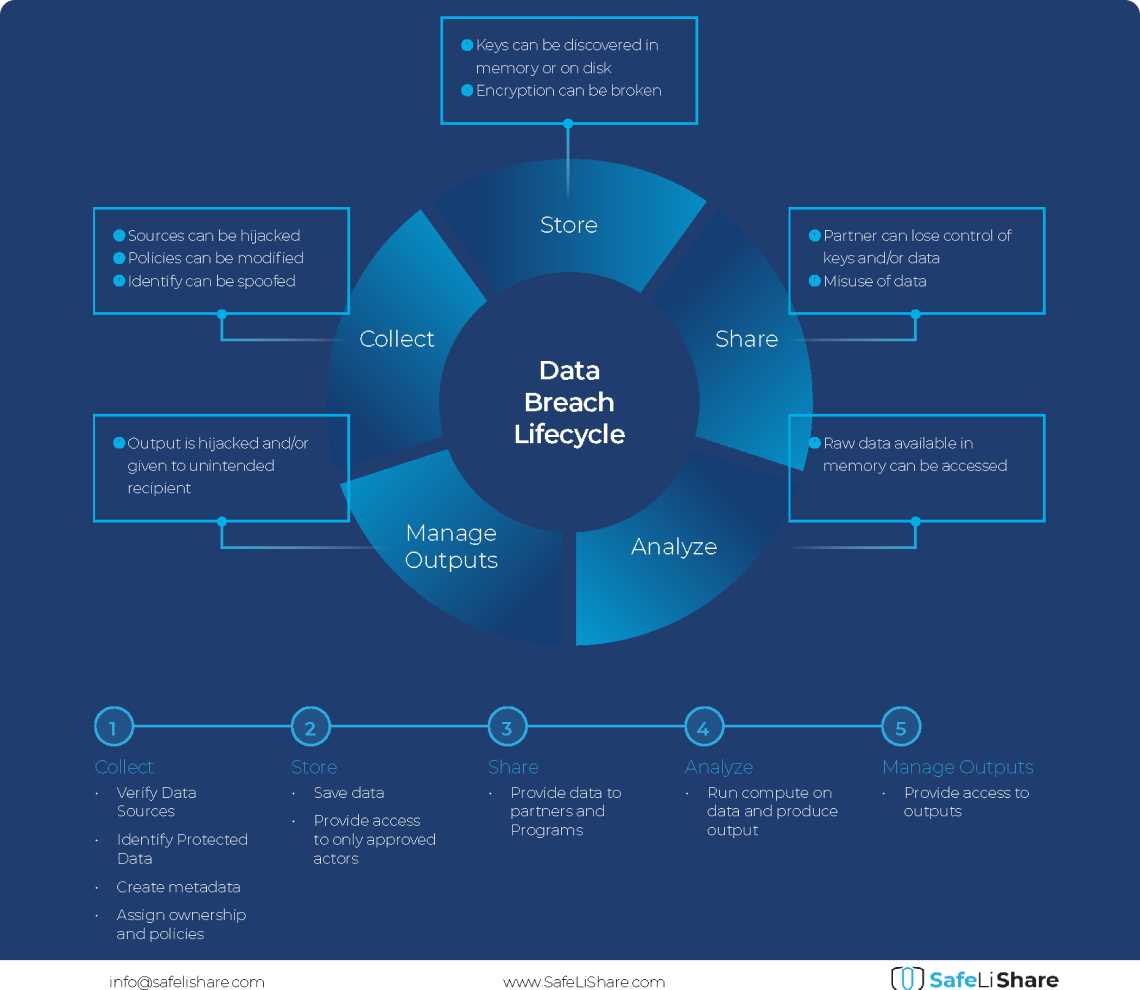

Cloud Data Breach Lifecycle Explained

During the data life cycle, sensitive information may be exposed to vulnerabilities in transfer, storage, and processing activities.

February 21, 2024

Bring Compute to Data

Predicting cloud data egress costs can be a daunting task, often leading to unexpected expenses post-collaboration and inference.

February 21, 2024

Zero Trust and LLM: Better Together

Cloud analytics inference and Zero Trust security principles are synergistic components that significantly enhance data-driven decision-making and cybersecurity resilience.