The Universal AI Security Platform is designed to provide comprehensive security for Large Language Model (LLM) workflows, including Retrieval-Augmented Generation (RAG), training, and inference.

Orchestrate

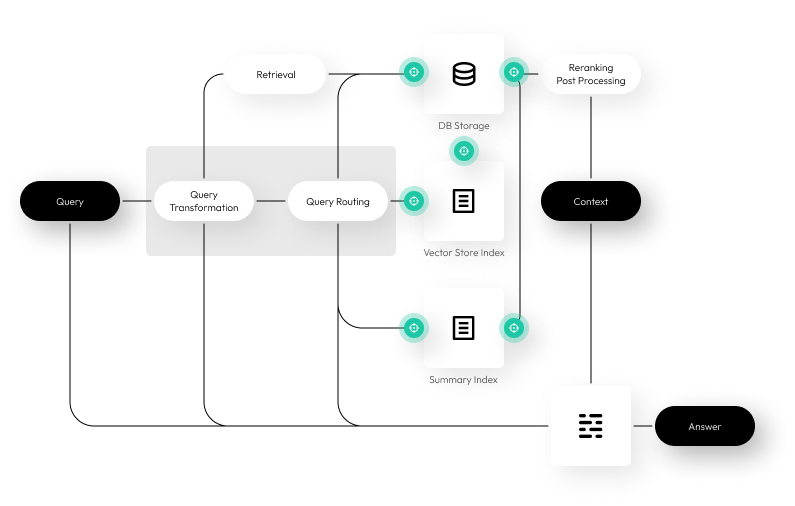

Safely coordinates access to, and the flow of, sensitive data across LLM RAG workflows

Secure

Protects data integrity and confidentiality throughout the embedding, indexing, and inferencing processes

Monitor

Provides real-time visibility and control over data and model interactions to ensure compliance and prevent vulnerabilities

LLM Secure Data Proxy

Orchestrates secure data processing and communication, ensuring that all interactions with LLMs are secure and compliant with organizational policies.

Protects data interactions within large language models, ensuring secure data handling and processing.

Centralizes and manages the lifecycle of secure enclaves, providing runtime encryption protection throughout the LLM chain.

Orchestrate, Secure, and Audit your AI capabilities with confidence.