SafeLiShare ConfidentialAI™ Sets LLM Access Boundary

GPT LLM and AI Governance

As ChatGPT and other Large Language Models (LLMs) continue to attract massive attention, it is important to understand the crucial governance issues involved in developing and deploying artificial intelligence systems. As AI systems become more prevalent and powerful, it is essential to establish frameworks and mechanisms that ensure their responsible and ethical use.

LLMs belong to a class of AI models that transform text from an input form to an output form. They can be used in a wide variety of applications, such as summarization, translation, text generation, and text understanding. Remarkably, the operating principle of LLMs can be described simply as finding the most reasonable continuation of the text input so far. Here, “most reasonable” means the LLM’s best effort to return a continuation that most people would find reasonable. Suppose, for example, that the input text so far is “The most important feature of an automobile is” and the LLM must supply the next word or phrase. The LLM may then compute a set of reasonable completions, such as “automatic transmission,” “safety systems,” “air conditioning,” “off-road capability,” and “lateral acceleration.”

The LLM may assign a probability estimate to each possible completion and pick the one with the highest probability. The question then becomes: where do these probabilities come from?
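A toy sketch of that selection step, for illustration only. The probabilities below are invented (they do not come from any real model), and `greedy_pick` and `sample_pick` are hypothetical helper names. Always picking the single most probable option is known as greedy decoding; many deployed LLMs instead sample from the distribution, which the second function shows.

```python
import math
import random

# Invented probabilities for the completions in the example above. A real LLM
# scores individual tokens from a vocabulary of many thousands, not phrases.
candidates = {
    "safety systems": 0.34,
    "automatic transmission": 0.22,
    "air conditioning": 0.18,
    "off-road capability": 0.15,
    "lateral acceleration": 0.11,
}


def greedy_pick(probs: dict) -> str:
    """Return the completion with the highest probability (greedy decoding)."""
    return max(probs, key=probs.get)


def sample_pick(probs: dict, temperature: float = 1.0, rng=None) -> str:
    """Sample a completion; temperature < 1 sharpens the distribution, > 1 flattens it."""
    rng = rng or random.Random()
    # Dividing log-probabilities by the temperature is equivalent to raising
    # each probability to the power 1/temperature; random.choices normalizes.
    weights = [math.exp(math.log(p) / temperature) for p in probs.values()]
    return rng.choices(list(probs), weights=weights, k=1)[0]


print(greedy_pick(candidates))  # safety systems
```

With a very low temperature, sampling behaves almost exactly like the greedy choice; higher temperatures give less likely completions such as “lateral acceleration” a real chance of being chosen.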

LLM Model Training

This is where a key aspect of LLMs comes to the fore. LLMs arrive at their probability estimates by being trained on vast amounts of input (“billions of web pages”). This phase, called model training, is the workhorse of the entire model development process. AI model training in general, and LLM training in particular, is data-intensive, and it is not unusual to see vast data lakes and complex data pipelines used in the process.
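Real LLMs learn their probabilities by adjusting very large numbers of neural-network weights with gradient descent, which is far beyond a short example. As a much simpler stand-in that shows the core idea of estimating probabilities from data, the sketch below counts word pairs in a tiny, made-up corpus to build a bigram model. The corpus and the `train_bigram` name are hypothetical.

```python
from collections import Counter, defaultdict


def train_bigram(corpus: list) -> dict:
    """Estimate P(next_word | word) by counting adjacent word pairs."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for prev, nxt in zip(words, words[1:]):
            counts[prev][nxt] += 1
    # Normalize each word's counts into a probability distribution.
    model = {}
    for prev, nexts in counts.items():
        total = sum(nexts.values())
        model[prev] = {word: c / total for word, c in nexts.items()}
    return model


# Hypothetical training corpus.
corpus = [
    "the car has safety systems",
    "the car has air conditioning",
    "the car has safety systems and air conditioning",
]
model = train_bigram(corpus)
print(model["has"])  # "safety" gets 2/3, "air" gets 1/3
```

Because the estimates come straight from the data, changing the data changes the model: a few crafted sentences added to the corpus would shift these probabilities. That property is exactly what data poisoning exploits.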

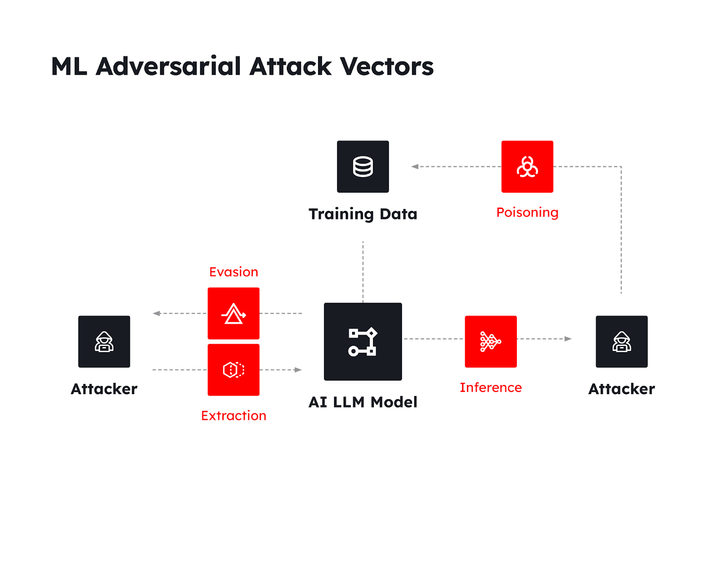

ML Adversarial Attack Vectors

A crucial aspect of LLM training is protection against data poisoning, in which erroneous or malicious data is inserted into the training data, corrupting what the model learns. Safeguarding data access mechanisms and protecting the data with encryption at rest, in transit, and in use are key to guarding against data poisoning. Poisoned data may also cause other problems, such as overfitting or underfitting, which affect both what the model learns and the overall performance and duration of the training process.
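One common building block for safeguarding data access is an integrity manifest: record a cryptographic digest of every training-data shard when it is ingested, and re-verify the shards before each training run. The sketch below uses Python’s standard `hashlib` and `hmac` modules; the shard names and helper functions are hypothetical, and this is not a description of how SafeLiShare ConfidentialAI works. Note its limits: it detects data altered or injected after the manifest was recorded, not data that was malicious from the start, and the manifest itself must be stored where an attacker cannot rewrite it.

```python
import hashlib
import hmac


def sha256_hex(data: bytes) -> str:
    """Hex-encoded SHA-256 digest of a shard's raw bytes."""
    return hashlib.sha256(data).hexdigest()


def build_manifest(shards: dict) -> dict:
    """Record a digest for every training shard at ingestion time."""
    return {name: sha256_hex(blob) for name, blob in shards.items()}


def find_tampered(shards: dict, manifest: dict) -> list:
    """List shards that were modified, injected, or removed since the manifest was built."""
    suspicious = []
    for name in sorted(set(shards) | set(manifest)):
        if name not in shards or name not in manifest:
            suspicious.append(name)  # shard removed or injected
        elif not hmac.compare_digest(sha256_hex(shards[name]), manifest[name]):
            suspicious.append(name)  # shard contents changed
    return suspicious


# Hypothetical shards: in practice these would be files in a data lake.
shards = {
    "shard-001": b"the car has safety systems",
    "shard-002": b"the car has air conditioning",
}
manifest = build_manifest(shards)

shards["shard-002"] = b"the car has no brakes"  # altered after ingestion
shards["shard-003"] = b"injected record"        # added after ingestion
print(find_tampered(shards, manifest))  # ['shard-002', 'shard-003']
```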

The AI/ML Adversarial Attack Vectors

| Attack | Explanation |
|---|---|
| Poisoning | Deliberate insertion of malicious data into the training set |
| Inference | Determine whether a data point was in the training set |
| Extraction | Steal a model by creating a functionally equivalent surrogate of the original model |
| Evasion | Provide the model a fabricated input in order to manipulate the model’s output |

Source: Gartner, May 2023 (Source ID G00778428)

A generally accepted maxim in model training is that better data leads to better learnability, and better data usually means sensitive, specific, and perhaps private data. This further highlights the importance of protecting data pipelines from exfiltration and leakage.

Once the LLM has been trained, it is deployed and begins what is called the inference process, in which the model responds to user inputs. The inference phase introduces new threat vectors, such as the inference threat. The goal of this type of attack is to pose a carefully designed and orchestrated series of questions to the model and then analyze the answers to reveal specific pieces of information, such as patients’ street addresses or medical conditions[1]. Another threat to a deployed model is outright IP theft.
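The “Inference” row of the attack table, deciding whether a record was in the training set, can be illustrated with a toy membership-inference attack. The sketch assumes a deliberately overfit model whose confidence is highest on records it memorized; the attacker never sees the training set and simply flags any query that scores above a threshold. The training records, the threshold, and the function names are all hypothetical.

```python
import math

# Hypothetical private training records, hidden from the attacker.
TRAINING_SET = [(1.0, 2.0), (3.5, 0.5), (-2.0, 4.0)]


def model_confidence(x: tuple) -> float:
    """Toy overfit model: confidence decays with distance to the nearest
    training record, so memorized records score exactly 1.0."""
    d = min(math.dist(x, t) for t in TRAINING_SET)
    return math.exp(-d)


def guess_membership(x: tuple, threshold: float = 0.9) -> bool:
    """Attacker's rule: very high confidence suggests x was a training record."""
    return model_confidence(x) >= threshold


queries = [(1.0, 2.0), (1.1, 2.1), (0.0, 0.0)]
print([guess_membership(q) for q in queries])  # [True, False, False]
```

The near neighbor (1.1, 2.1) scores about 0.87 and is correctly rejected at this threshold; with a lower threshold it would become a false positive. Real attacks calibrate the threshold, for example with shadow models, and tend to succeed more often against models that overfit.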

Yet another threat arises when the model has been deployed for some time and its hyperparameters need manual or algorithmic re-tuning. Hyperparameters are akin to “global variables” that control settings such as the learning rate and the number of nodes or hidden layers. Malicious actors who gain access to them may alter the overall performance, effectiveness, and results of the model.
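One way to make unauthorized hyperparameter changes detectable is to sign the configuration with a keyed hash (HMAC) and verify the tag before any training or re-tuning job loads it. This minimal sketch uses Python’s standard `hmac`, `hashlib`, and `json` modules; the hyperparameter values, helper names, and key handling are hypothetical. In practice the key would come from a secrets manager or key management service rather than source code.

```python
import hashlib
import hmac
import json


def sign_config(config: dict, key: bytes) -> str:
    """Return an HMAC-SHA256 tag over a canonical JSON encoding of the config."""
    payload = json.dumps(config, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify_config(config: dict, tag: str, key: bytes) -> bool:
    """Constant-time check that the config has not changed since it was signed."""
    return hmac.compare_digest(sign_config(config, key), tag)


key = b"hypothetical-key-loaded-from-a-secrets-manager"
hparams = {"learning_rate": 3e-4, "hidden_layers": 24, "hidden_units": 4096}
tag = sign_config(hparams, key)

tampered = dict(hparams, learning_rate=3e-2)  # attacker raises the learning rate
print(verify_config(hparams, tag, key))   # True
print(verify_config(tampered, tag, key))  # False
```

Serializing with `sort_keys=True` yields the same bytes regardless of key order, so a legitimately reordered config still verifies. Signing only detects changes; it does not stop anyone who holds the key, so access to the key must be controlled as well.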

Encryption in Use and Secure Enclave

These new types of threats may be mitigated by encryption-in-use technologies that encrypt not only the model but also its responses, which only authorized users can decrypt. Encryption-in-use technologies are available when models are deployed using secure enclave technology. However, care must be exercised when using secure enclaves. Many LLMs make extensive use of multiple external GPUs, particularly during training; in such cases, as with GPT-4, the connection between the secure enclave and the GPUs may not be secure.

Using secure enclaves to deliver Large Language Models (LLMs) in the cloud can play a significant role in enhancing their security. Here’s why:

- Data Confidentiality: LLMs are typically trained on vast amounts of data, some of which may be sensitive or confidential. When LLMs are delivered in the cloud, secure enclaves provide a trusted execution environment in which sensitive data can be processed without exposing it to the underlying infrastructure. This helps protect data confidentiality, because the data remains encrypted and inaccessible to unauthorized parties.

- Model Protection: The models themselves can be valuable assets, so securing their delivery is crucial. Deploying the models within a secure enclave shields them from potential attacks and unauthorized access. Secure enclaves use hardware-based isolation to help prevent tampering, reverse engineering, and unauthorized modification of the models.

- Secure Computation: Secure enclaves offer a protected execution environment for computation. LLMs often require significant computational resources for tasks such as natural language processing and inference. Running the models within a secure enclave protects these computations from interference or tampering, helping ensure the integrity and confidentiality of the results.

- Protection against Side-Channel Attacks: In a side-channel attack, an attacker tries to extract information by analyzing physical characteristics of the system, such as power consumption or timing. Isolating execution within a secure enclave reduces the attack surface for such attacks, although enclaves are not immune to side channels and their resistance depends on the implementation.

- Regulatory Compliance: Secure enclaves can help meet regulatory requirements for data protection and security. The enhanced security they provide can help organizations demonstrate compliance with industry-specific regulations and standards, giving them confidence in delivering LLMs in a secure and compliant manner.

Secure enclaves can significantly enhance the security and access enforcement of LLM delivery in the cloud. A comprehensive security approach should also include remote attestation and other measures, such as secure communication protocols, access controls, regular updates and patches, and monitoring mechanisms, to ensure holistic protection.
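Remote attestation lets a key owner check an enclave before trusting it: the hardware produces a signed measurement (a hash) of the code and model loaded into the enclave, and a key broker releases the model decryption key only if the signature is genuine and the measurement is on an approved list. The sketch below simulates that flow with Python’s standard library under loud assumptions. The shared `HARDWARE_KEY` HMAC stands in for the vendor-signed quotes and certificate chains that real attestation schemes (for example Intel SGX/TDX or AMD SEV-SNP) use, and `Quote`, `make_quote`, and `KeyBroker` are hypothetical names, not any vendor’s API.

```python
import hashlib
import hmac
import secrets
from dataclasses import dataclass
from typing import Optional

# ASSUMPTION: a shared HMAC key stands in for the hardware vendor's signing
# key. Real attestation uses asymmetric signatures and certificate chains.
HARDWARE_KEY = secrets.token_bytes(32)


@dataclass
class Quote:
    measurement: str  # SHA-256 of the code and model loaded into the enclave
    signature: str    # simulated hardware signature over the measurement


def _sign(measurement: str) -> str:
    return hmac.new(HARDWARE_KEY, measurement.encode(), hashlib.sha256).hexdigest()


def make_quote(enclave_image: bytes) -> Quote:
    """Simulate the hardware measuring and signing what the enclave loaded."""
    measurement = hashlib.sha256(enclave_image).hexdigest()
    return Quote(measurement, _sign(measurement))


class KeyBroker:
    """Releases the model decryption key only to enclaves running approved code."""

    def __init__(self, approved_measurements: set, model_key: bytes):
        self.approved = approved_measurements
        self.model_key = model_key

    def release_key(self, quote: Quote) -> Optional[bytes]:
        if not hmac.compare_digest(_sign(quote.measurement), quote.signature):
            return None  # quote was not produced by the (simulated) hardware
        if quote.measurement not in self.approved:
            return None  # enclave runs unapproved code or a different model
        return self.model_key


approved_image = b"inference-server-v1 + encrypted-model-v1"  # hypothetical
broker = KeyBroker({hashlib.sha256(approved_image).hexdigest()}, secrets.token_bytes(32))

print(broker.release_key(make_quote(approved_image)) is not None)     # True
print(broker.release_key(make_quote(b"patched-server")) is not None)  # False
```

Binding the key to a measurement means that a modified server or a swapped model produces a different hash and never receives the key, while a forged quote fails the signature check.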

Cloud computing has played a significant role in driving technological innovation over the past decade. Confidential computing is a next-generation cloud computing technology that aims to protect data while it is being processed in memory. It enables organizations to keep sensitive data encrypted and secure even while applications use it in cloud environments.

Major cloud service providers, such as Microsoft Azure, Google Cloud, and Amazon Web Services (AWS), have introduced confidential computing offerings. NVIDIA supports confidential computing on its H100 GPUs, and its NVIDIA Morpheus platform applies AI to cybersecurity and data privacy workflows.

Summary

The use of LLMs and other AI models can introduce significant privacy and security challenges, particularly around data exposure when data is entrusted to third-party providers. SafeLiShare has taken on the mission of addressing these challenges with our ConfidentialAI™ solution across the entire AI/ML model lifecycle: training, serving, and retraining. In addition, for generative AI, you can run LLMs in secure enclaves after training: the model is first trained, then encrypted and deployed in a secure enclave, and the input to the model is also encrypted. Request a demo of SafeLiShare ConfidentialAI or email ai@safelishare.com.

[1] One is reminded of the popular game of years past, “Twenty Questions,” in which contestants had to guess the identity of a person based on yes/no answers to 20 questions.

Experience Secure Collaborative Data Sharing Today.

Learn more about how SafeLiShare works

Suggested for you

February 21, 2024

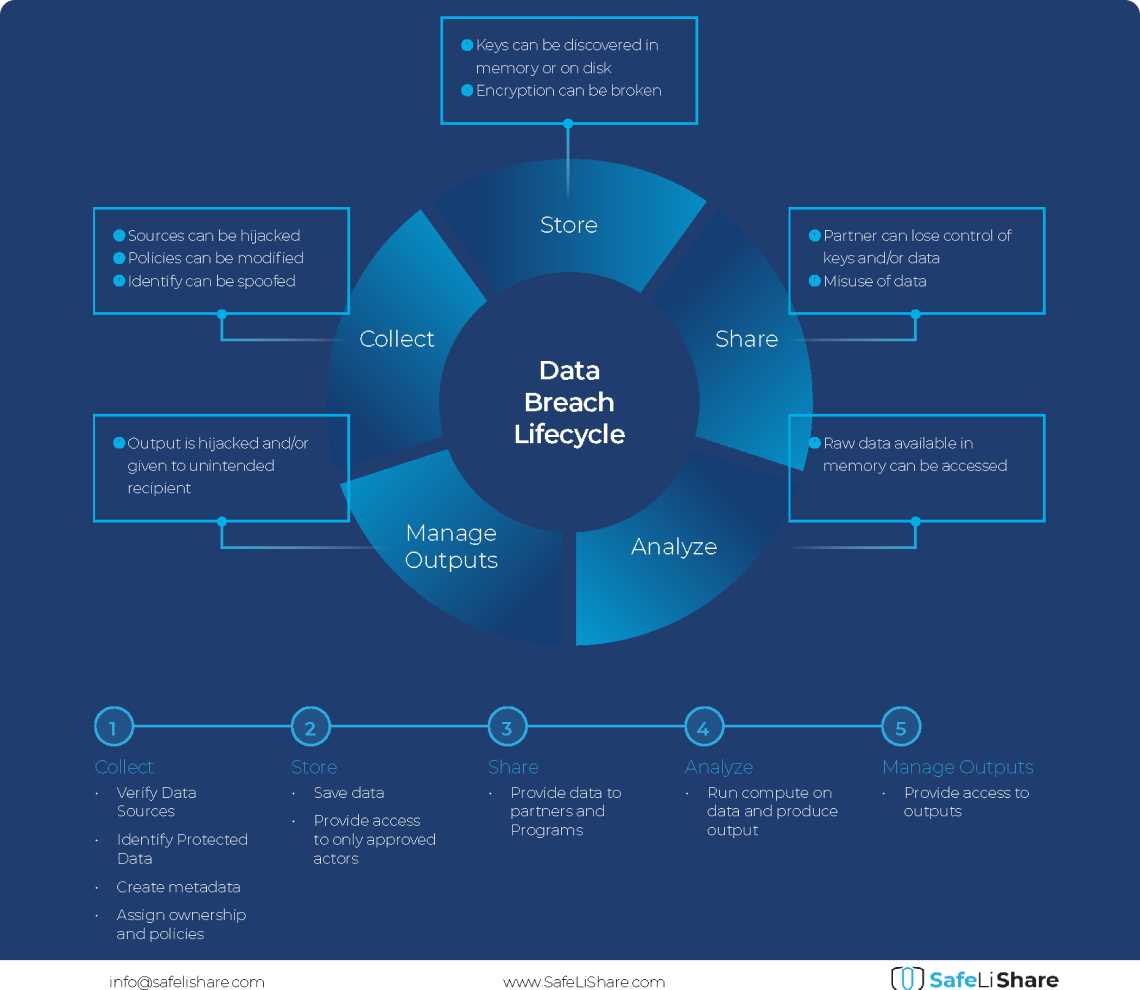

Cloud Data Breach Lifecycle Explained

During the data life cycle, sensitive information may be exposed to vulnerabilities in transfer, storage, and processing activities.

February 21, 2024

Bring Compute to Data

Predicting cloud data egress costs can be a daunting task, often leading to unexpected expenses post-collaboration and inference.

February 21, 2024

Zero Trust and LLM: Better Together

Cloud analytics inference and Zero Trust security principles are synergistic components that significantly enhance data-driven decision-making and cybersecurity resilience.