Secure generative AI LLM adoption using Confidential Computing

Countering Inversion, Inference and Extraction Attacks on ML Models

AI model governance and data privacy are two critical aspects that require careful consideration in the development and deployment of AI systems. AI model governance involves establishing guidelines and protocols to ensure that AI models are developed, validated, and used in an ethical and responsible manner. It includes addressing issues such as bias, fairness, transparency, and accountability in AI decision-making processes. Data privacy, on the other hand, focuses on protecting the confidentiality, integrity, and availability of user data. Organizations must implement robust security measures, obtain informed consent, and comply with data protection regulations to safeguard personal information. Additionally, data anonymization, encryption, and secure data storage practices play a crucial role in preserving data privacy. By prioritizing AI model governance and data privacy, organizations can foster trust, mitigate risks, and uphold the ethical use of AI technology.

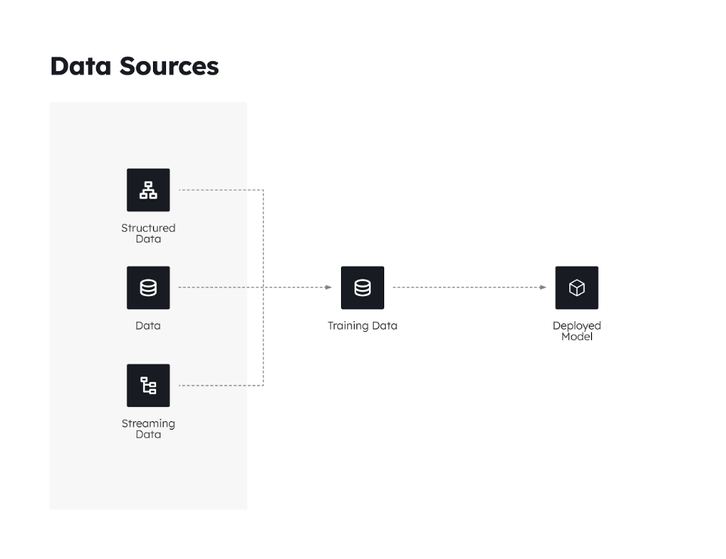

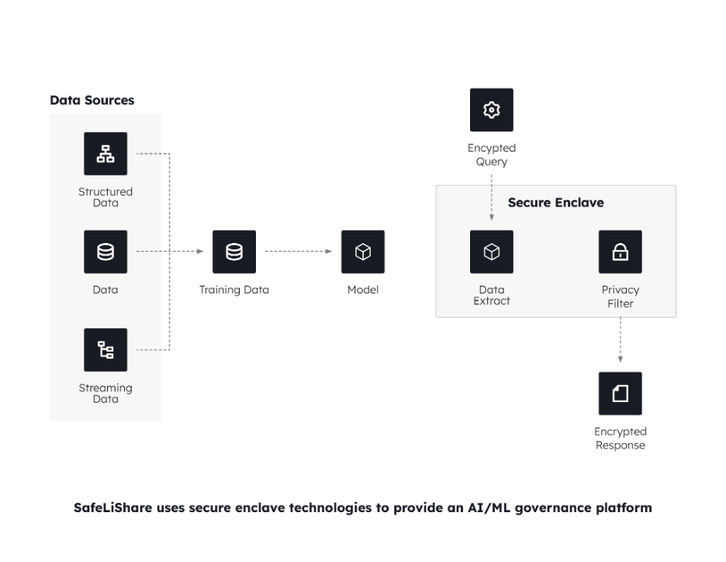

Model, Code, and Data

Machine learning (ML) models, code, and data are interconnected components that interact in a dynamic manner. The ML model acts as the core component responsible for learning patterns and making predictions based on input data. The code serves as the instructions that guide the training and operation of the model. It encompasses algorithms, preprocessing techniques, and optimization strategies. The data, which serves as the fuel for the model, includes both the training data used to train the model and the input data on which the model makes predictions. The code transforms and analyzes the data, extracting meaningful features and applying statistical techniques to train the model effectively. The trained ML model, in turn, can be deployed to process new data and generate predictions. The interaction between the ML model, code, and data is iterative and cyclical, involving continuous refinement of the code and model based on insights gained from the data. This interplay is crucial for the development and improvement of ML models, enabling them to adapt, learn, and deliver accurate predictions.

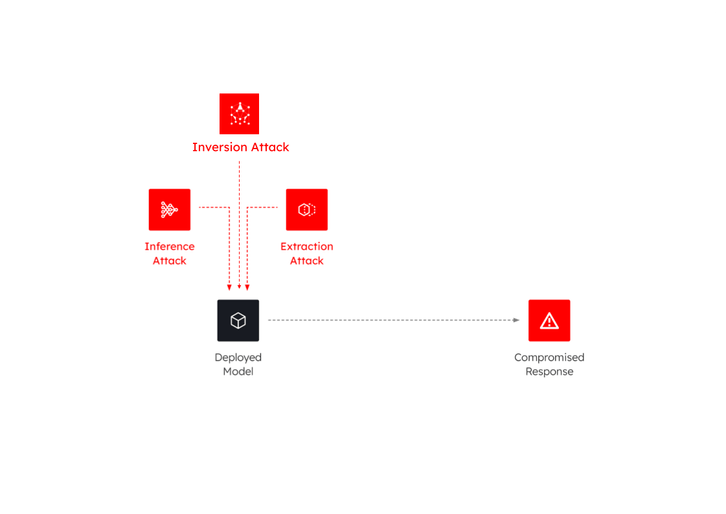

The Rise of AI Privacy Breaches

The rise of adversarial attacks on models and of AI privacy breaches has become a pressing concern in artificial intelligence. Adversarial attacks are deliberate attempts to manipulate AI systems with carefully crafted inputs or queries that deceive the models into making incorrect predictions. These attacks exploit weaknesses in a model’s decision-making process and can have severe consequences in domains such as cybersecurity, autonomous vehicles, and financial systems. AI privacy breaches, on the other hand, involve unauthorized access to, or misuse of, sensitive data stored or processed by AI systems. Because AI systems often rely on vast amounts of personal and confidential data, such breaches can result in identity theft, privacy violations, or financial loss. Safeguarding AI systems against adversarial attacks and privacy breaches requires robust security measures, regular vulnerability assessments, and privacy-enhancing technologies such as data encryption, differential privacy, and secure federated learning. Organizations and researchers must therefore prioritize resilient AI systems that can withstand adversarial attacks while protecting the privacy of user data.

Model Identity and Access

Secure enclave technology can play a vital role in safeguarding ML models against some newly emerging threat vectors. We consider three types of threat vectors in this post.

In the first type of attack, once a model has been deployed, adversaries attempt to infer sensitive information from the model by exploiting its outputs. By submitting queries to the model and analyzing the responses, attackers can gain insights into confidential data used during training, such as private images or personally identifiable information. Such attacks are known as Model Inversion attacks.
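
To make the idea concrete, here is a toy black-box inversion in Python. It is a sketch under explicit assumptions. The victim, `make_victim`, is a hypothetical four-feature logistic regression whose weights the attacker never sees and which returns only a confidence score. The attack, `invert`, is simple coordinate-wise hill climbing over queries, a simplified form of confidence-guided search. Starting from a neutral input, the attacker keeps every small change that raises the target confidence. The result is an input the model strongly associates with the target class. In a face-recognition setting, such an input could resemble a person in the training data.

```python
import math
from typing import Callable, List, Tuple


def make_victim(weights: List[float], bias: float) -> Callable[[List[float]], float]:
    """Hypothetical deployed model: returns P(target class | x) and nothing else."""
    def predict_proba(x: List[float]) -> float:
        z = sum(w * xi for w, xi in zip(weights, x)) + bias
        return 1.0 / (1.0 + math.exp(-z))
    return predict_proba


def invert(query: Callable[[List[float]], float], dim: int,
           max_rounds: int = 50, step: float = 0.1) -> Tuple[List[float], float]:
    """Black-box hill climbing: only the model's confidence scores are used."""
    x = [0.5] * dim                      # neutral starting input, features kept in [0, 1]
    best = query(x)
    for _ in range(max_rounds):
        improved = False
        for i in range(dim):
            for delta in (step, -step):
                candidate = list(x)
                candidate[i] = min(1.0, max(0.0, candidate[i] + delta))
                score = query(candidate)
                if score > best:         # keep any change that raises the confidence
                    x, best = candidate, score
                    improved = True
        if not improved:                 # no single step helps any more: local optimum
            break
    return x, best


# Usage: the attacker only calls `victim`; the weights below are made up for illustration.
victim = make_victim(weights=[2.0, -1.5, 0.0, 3.0], bias=-1.0)
reconstruction, confidence = invert(victim, dim=4)
print([round(v, 2) for v in reconstruction], round(confidence, 3))
```

The recovered input pushes features with positive weights up, pushes features with negative weights down, and leaves irrelevant features untouched. In other words, the attack reveals what the model "looks for" without ever reading its parameters.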

In another type of attack, called Membership Inference attacks, attackers exploit the model’s responses to determine whether specific data samples were part of the model’s training set. By submitting carefully crafted queries and analyzing the model’s outputs, adversaries can infer whether a particular sample was used during training, potentially violating user privacy or intellectual property rights.
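
The simplest membership inference baseline relies on one observation: overfit models tend to be more confident on samples they were trained on. The Python sketch below is a toy under explicit assumptions. `overfit_confidence` is a hypothetical model whose confidence simply decays with distance from its memorized `TRAINING_SET`. The 0.9 threshold is arbitrary; published attacks calibrate it, for example with shadow models trained on similar data.

```python
import math
from typing import Callable, Sequence, Tuple

Point = Tuple[float, float]

# Hypothetical private training records (never revealed to the attacker)
TRAINING_SET: Sequence[Point] = [(0.1, 0.9), (0.4, 0.2), (0.8, 0.7)]


def overfit_confidence(x: Point) -> float:
    """Toy stand-in for an overfit model: confidence peaks on memorized training points."""
    nearest = min(math.dist(x, t) for t in TRAINING_SET)
    return math.exp(-10.0 * nearest)


def infer_membership(query: Callable[[Point], float], x: Point, threshold: float = 0.9) -> bool:
    """Guess 'member' whenever the model is unusually confident about the sample."""
    return query(x) >= threshold


for candidate in [(0.4, 0.2), (0.5, 0.5), (0.12, 0.88)]:
    print(candidate, infer_membership(overfit_confidence, candidate))
```

Only the exact training record crosses the threshold. The nearby point (0.12, 0.88) does not, which is why real attacks tune the threshold carefully to balance false positives against missed members.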

In a third type of attack, called Model Extraction, attackers attempt to steal the deployed model by querying it and using its responses to build a functionally equivalent copy of their own.
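
When a deployed model returns confidence scores, extraction can be surprisingly cheap. The Python sketch below shows an equation-solving attack on a hypothetical logistic-regression API. The names `deploy_logistic_api` and `extract`, and all of the weights, are made up for illustration. The attack works because logit(p) = w·x + b. One query at the origin reveals the bias, and one query per unit vector reveals each weight. Larger models need far more queries, and attackers typically train a substitute model on the collected query/response pairs instead, but the principle is the same.

```python
import math
from typing import Callable, List, Tuple


def deploy_logistic_api(weights: List[float], bias: float) -> Callable[[List[float]], float]:
    """Hypothetical deployed logistic-regression API that returns a confidence score."""
    def predict_proba(x: List[float]) -> float:
        z = sum(w * xi for w, xi in zip(weights, x)) + bias
        return 1.0 / (1.0 + math.exp(-z))
    return predict_proba


def logit(p: float) -> float:
    """Inverse of the sigmoid: maps a probability back to the linear score w.x + b."""
    return math.log(p / (1.0 - p))


def extract(query: Callable[[List[float]], float], dim: int) -> Tuple[List[float], float]:
    """Recover (weights, bias) with dim + 1 queries by solving logit(p) = w.x + b."""
    bias = logit(query([0.0] * dim))          # x = 0 isolates the bias
    weights = []
    for i in range(dim):
        unit = [0.0] * dim
        unit[i] = 1.0                         # x = e_i isolates w_i
        weights.append(logit(query(unit)) - bias)
    return weights, bias


secret = deploy_logistic_api([0.7, -1.2, 2.5], bias=0.3)
stolen_weights, stolen_bias = extract(secret, dim=3)
copy = deploy_logistic_api(stolen_weights, stolen_bias)
probe = [0.2, 0.9, -0.4]
print(abs(secret(probe) - copy(probe)) < 1e-9)
```

Four queries are enough to produce a copy that agrees with the original on every input. This is why restricting who may query a model, and what its responses reveal, matters as much as protecting the model file itself.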

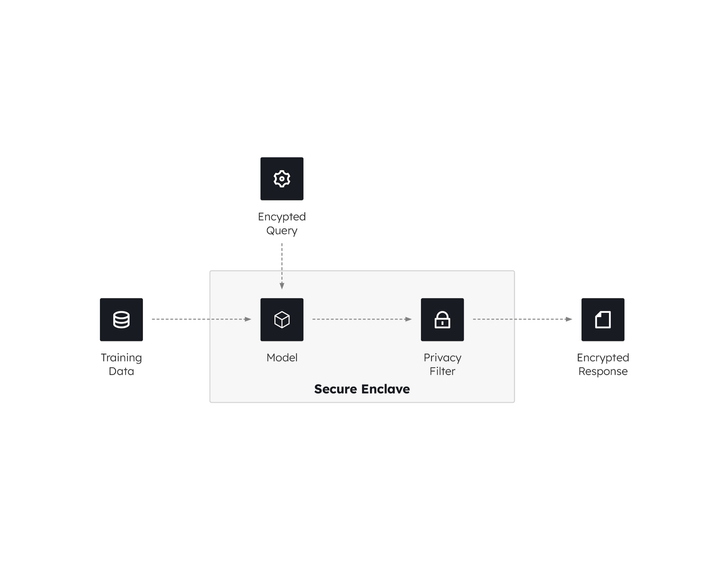

The Need for Secure Enclave and Confidential Computing

The need for a secure enclave and confidential computing arises from the increasing importance of protecting sensitive data and computations in today’s digital landscape. A secure enclave is a trusted execution environment that provides a secure and isolated run-time memory space within a computing system. It enables the processing of sensitive data and execution of critical operations in a protected environment, shielding them from potential threats and unauthorized access.

The growing pervasiveness of ML models means that Chief Data and Analytics Officers (CDAOs) and their teams must prioritize the security of these models. An unsecured model is exposed to attacks that specifically target machine learning systems, such as the three described above. Any business or decision-making process that relies on such a model is put at risk as well. CDAOs and their teams therefore need to ensure that their ML models are resilient and protected, so that the operations and decisions built on them remain sound.

By deploying a model inside a secure enclave, we can ensure that only authorized entities can access it and that input queries stay encrypted, and therefore confidential. Secure enclave technology allows the encrypted queries to be decrypted only inside the enclave before they are passed to the model. The model’s outputs are then passed through a privacy filter (e.g., Differential Privacy or de-identification algorithms) so that any protected health information (PHI) or personally identifiable information (PII) is removed. Finally, the outputs are encrypted and returned to the user as responses. Because only authorized entities can query the model, and its outputs are filtered before they leave the enclave, the model is protected against Membership Inference, Model Inversion, and Model Extraction attacks. Furthermore, encrypting both inputs and outputs preserves data confidentiality.
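
The request flow described in the previous paragraph can be sketched in Python. This is a minimal illustration under stated assumptions, not a production design:

- The enclave boundary is simulated by ordinary functions.
- Per-client keys are assumed to be provisioned already; in a real TEE this would follow remote attestation.
- The regex-based `privacy_filter` stands in for a de-identification algorithm.
- The hash-based `seal`/`unseal` pair is a toy stand-in for a vetted authenticated cipher such as AES-GCM and must not be used in production.

All names (`enclave_handle`, `toy_model`, `AUTHORIZED_CLIENTS`) are hypothetical.

```python
import hashlib
import hmac
import os
import re

# --- Toy authenticated encryption (stand-in for a vetted AEAD such as AES-GCM) ---

def _subkeys(key: bytes):
    """Derive separate encryption and MAC keys from one client key."""
    return hashlib.sha256(b"enc" + key).digest(), hashlib.sha256(b"mac" + key).digest()


def _keystream(enc_key: bytes, nonce: bytes, length: int) -> bytes:
    blocks = (hashlib.sha256(enc_key + nonce + i.to_bytes(8, "big")).digest()
              for i in range(length // 32 + 1))
    return b"".join(blocks)[:length]


def seal(key: bytes, plaintext: bytes) -> bytes:
    enc_key, mac_key = _subkeys(key)
    nonce = os.urandom(16)
    ct = bytes(a ^ b for a, b in zip(plaintext, _keystream(enc_key, nonce, len(plaintext))))
    return nonce + ct + hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()


def unseal(key: bytes, blob: bytes) -> bytes:
    enc_key, mac_key = _subkeys(key)
    nonce, ct, tag = blob[:16], blob[16:-32], blob[-32:]
    if not hmac.compare_digest(tag, hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()):
        raise ValueError("ciphertext failed authentication")
    return bytes(a ^ b for a, b in zip(ct, _keystream(enc_key, nonce, len(ct))))

# --- Code that would run inside the enclave ---

# Hypothetical per-client keys; in practice provisioned after remote attestation
AUTHORIZED_CLIENTS = {"client-a": os.urandom(32)}

# Simple de-identification: redact e-mail addresses and US SSN-shaped strings
PII_PATTERNS = [re.compile(r"[\w.+-]+@[\w-]+\.[\w.]+"), re.compile(r"\b\d{3}-\d{2}-\d{4}\b")]


def privacy_filter(text: str) -> str:
    for pattern in PII_PATTERNS:
        text = pattern.sub("[REDACTED]", text)
    return text


def toy_model(prompt: str) -> str:
    """Hypothetical model whose raw output leaks a memorized training record."""
    return f"Re: {prompt} -> contact jane.doe@example.com, SSN 123-45-6789"


def enclave_handle(client_id: str, sealed_query: bytes) -> bytes:
    key = AUTHORIZED_CLIENTS.get(client_id)
    if key is None:                                  # only authorized entities may query
        raise PermissionError(f"unknown client {client_id!r}")
    prompt = unseal(key, sealed_query).decode()      # decrypt only inside the enclave
    answer = privacy_filter(toy_model(prompt))       # strip PII before anything leaves
    return seal(key, answer.encode())                # encrypt the response for the client


# Client side: encrypt the query, send it, decrypt the filtered answer
client_key = AUTHORIZED_CLIENTS["client-a"]
reply = unseal(client_key, enclave_handle("client-a", seal(client_key, b"Who handles account 42?")))
print(reply.decode())
```

The ordering is the important design choice. Authorization is checked before any decryption, and filtering happens before encryption. As a result, plaintext queries and unfiltered outputs exist only inside the enclave.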

When the model is too large to deploy in a Trusted Execution Environment, which is still a nascent technology, we can instead query the trained model ahead of time to extract a set of structured answers (e.g., a table of responses). That table can then be encrypted and stored securely using secure enclave technology.
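
A minimal Python sketch of this fallback, assuming a fixed list of approved questions known in advance:

- `big_model`, `build_sealed_table`, and `answer_from_table` are hypothetical names.
- The large model is stood in for by a trivial function.
- The SHA-256 keystream is a toy stand-in for a vetted cipher such as AES-GCM.

The offline step queries the model once per approved question and encrypts the resulting table. The online step runs inside the enclave and only verifies, decrypts, and looks up answers, so the large model never has to be loaded there.

```python
import hashlib
import hmac
import json
import os
from typing import Callable, Iterable


def _xor(key: bytes, nonce: bytes, data: bytes) -> bytes:
    # Toy SHA-256 counter-mode keystream; stand-in for a vetted cipher such as AES-GCM
    stream = b"".join(hashlib.sha256(key + nonce + i.to_bytes(8, "big")).digest()
                      for i in range(len(data) // 32 + 1))
    return bytes(a ^ b for a, b in zip(data, stream))


def _keys(key: bytes):
    return hashlib.sha256(b"enc" + key).digest(), hashlib.sha256(b"mac" + key).digest()


def _norm(question: str) -> str:
    """Normalize case and whitespace so trivially different phrasings share one entry."""
    return " ".join(question.lower().split())


def build_sealed_table(model: Callable[[str], str], questions: Iterable[str], key: bytes) -> bytes:
    """Offline: query the large model once per approved question, then encrypt the table."""
    table = {_norm(q): model(q) for q in questions}
    enc_key, mac_key = _keys(key)
    nonce = os.urandom(16)
    ct = _xor(enc_key, nonce, json.dumps(table).encode())
    return nonce + ct + hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()


def answer_from_table(sealed: bytes, key: bytes, question: str) -> str:
    """Online, inside the enclave: verify, decrypt, and look up the answer."""
    enc_key, mac_key = _keys(key)
    nonce, ct, tag = sealed[:16], sealed[16:-32], sealed[-32:]
    if not hmac.compare_digest(tag, hmac.new(mac_key, nonce + ct, hashlib.sha256).digest()):
        raise ValueError("sealed table failed authentication")
    table = json.loads(_xor(enc_key, nonce, ct))
    return table.get(_norm(question), "No approved answer for this question.")


def big_model(q: str) -> str:
    """Hypothetical stand-in for the large model that cannot fit in the enclave."""
    return f"Approved answer to: {q}"


key = os.urandom(32)
sealed = build_sealed_table(big_model, ["What is the refund policy?", "Who is the data steward?"], key)
print(answer_from_table(sealed, key, "what is the  refund policy?"))
```

The trade-off is coverage. Only pre-approved questions can be answered. In exchange, the set of possible outputs is bounded and auditable, and each answer can be passed through a privacy filter before the table is sealed.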

** US Patent Pending **


Suggested for you

February 21, 2024

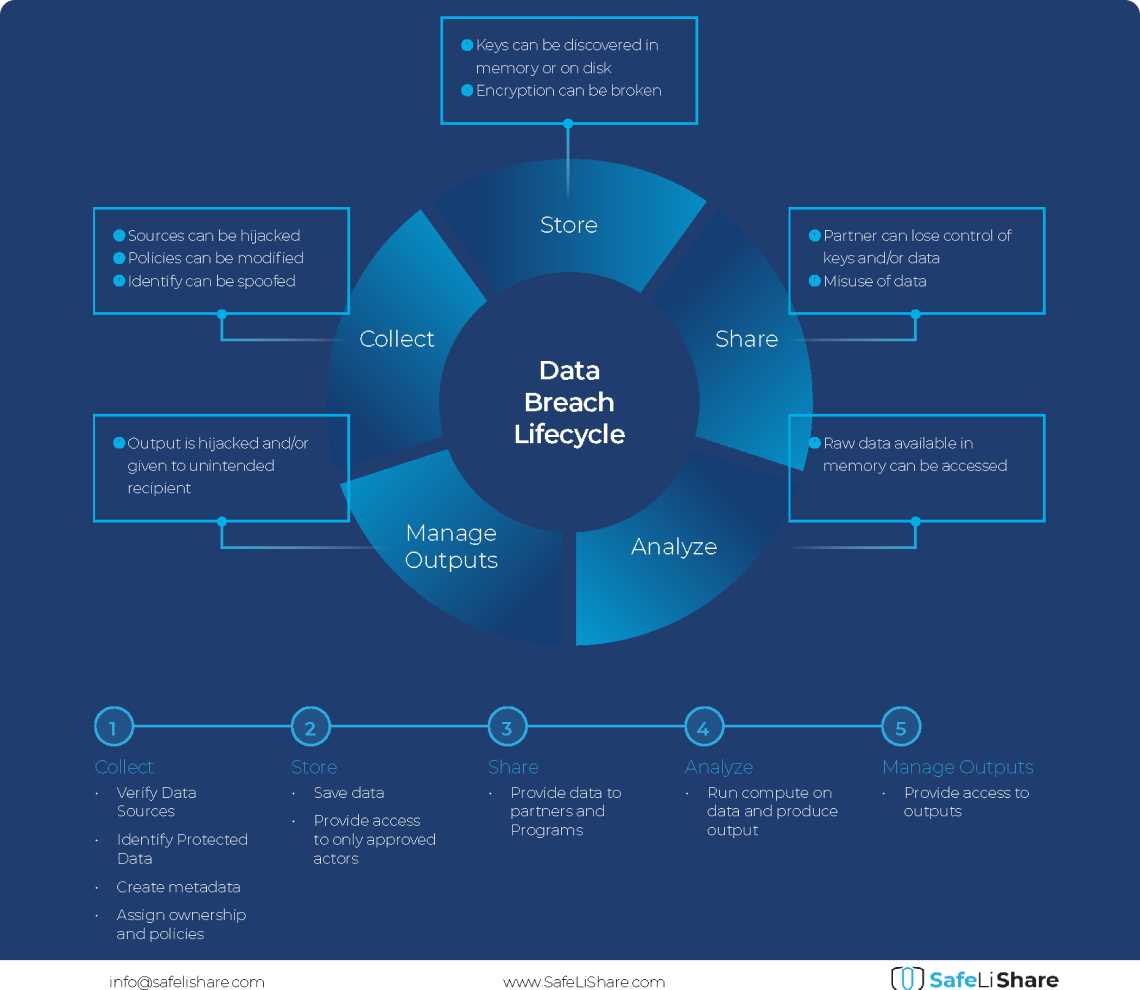

Cloud Data Breach Lifecycle Explained

During the data life cycle, sensitive information may be exposed to vulnerabilities in transfer, storage, and processing activities.

February 21, 2024

Bring Compute to Data

Predicting cloud data egress costs can be a daunting task, often leading to unexpected expenses post-collaboration and inference.

February 21, 2024

Zero Trust and LLM: Better Together

Cloud analytics inference and Zero Trust security principles are synergistic components that significantly enhance data-driven decision-making and cybersecurity resilience.