Webinar

AI Models under Attack: Conventional Controls are not Enough

August 9, 2023

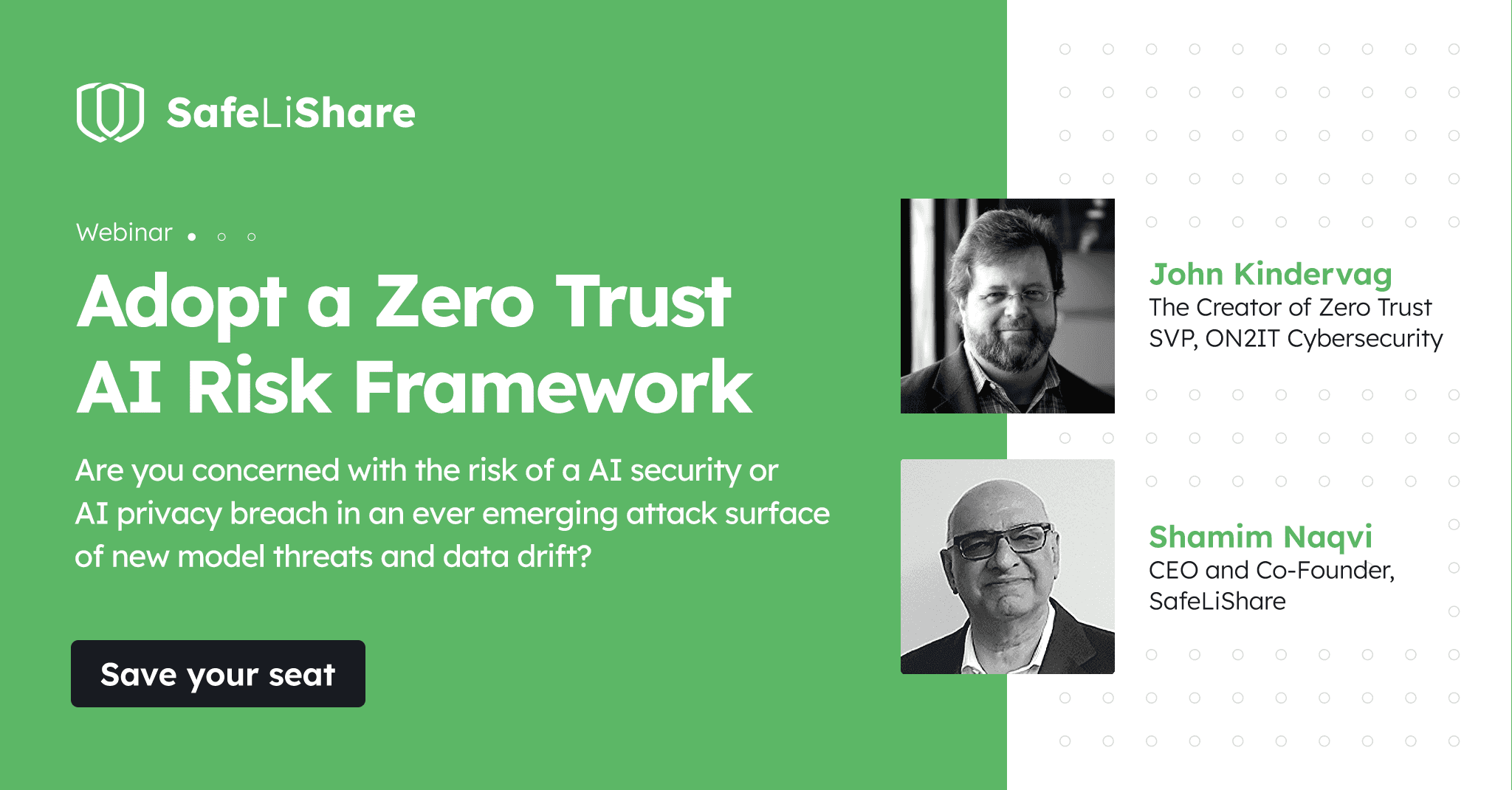

Are you concerned about the risk of an AI security or privacy breach across an ever-expanding attack surface of new model threats and data drift? Do you need security built into the dynamics of the AI lifecycle itself?

“2 in 5 organizations had an AI privacy breach or security incident, of which 1 in 4 were malicious attacks. Conventional controls ARE NOT enough. Attack surfaces are rapidly growing as AI becomes pervasive. Over 70% of enterprises have hundreds or thousands of AI models deployed, according to Gartner’s latest survey of AI adoption.” - Gartner

The interaction among an ML model, its code, and its data is iterative and cyclical: the code and model are continuously refined based on insights gained from the data. This interplay drives the development and improvement of ML models, enabling them to adapt, learn, and deliver accurate predictions.

The rise of adversarial attacks on models and of AI privacy breaches has become a pressing concern in the field of AI. Adversarial attacks are deliberate attempts to manipulate AI systems by introducing carefully crafted inputs or queries that deceive or mislead models into making incorrect predictions. In this webinar, John Kindervag and Shamim Naqvi can help you adopt a zero trust AI security framework with SafeLiShare’s ConfidentialAI™, so that you can close gaps and reduce complexity across the end-to-end lifecycle of ML, LLM, or other AI models.