How AI LLM Governance is Making Data Sharing Safer

With the implementation of AI, data processing has never been more central or more accessible. However, using AI requires proper governance and regulation to ensure that data sharing is safe and ethical.

AI governance refers to the set of policies, procedures, and guidelines that ensure that AI systems operate ethically and responsibly.

With growing concerns about the governance of AI, how can your enterprise ensure that you still have full compliance control and privacy when sharing your data?

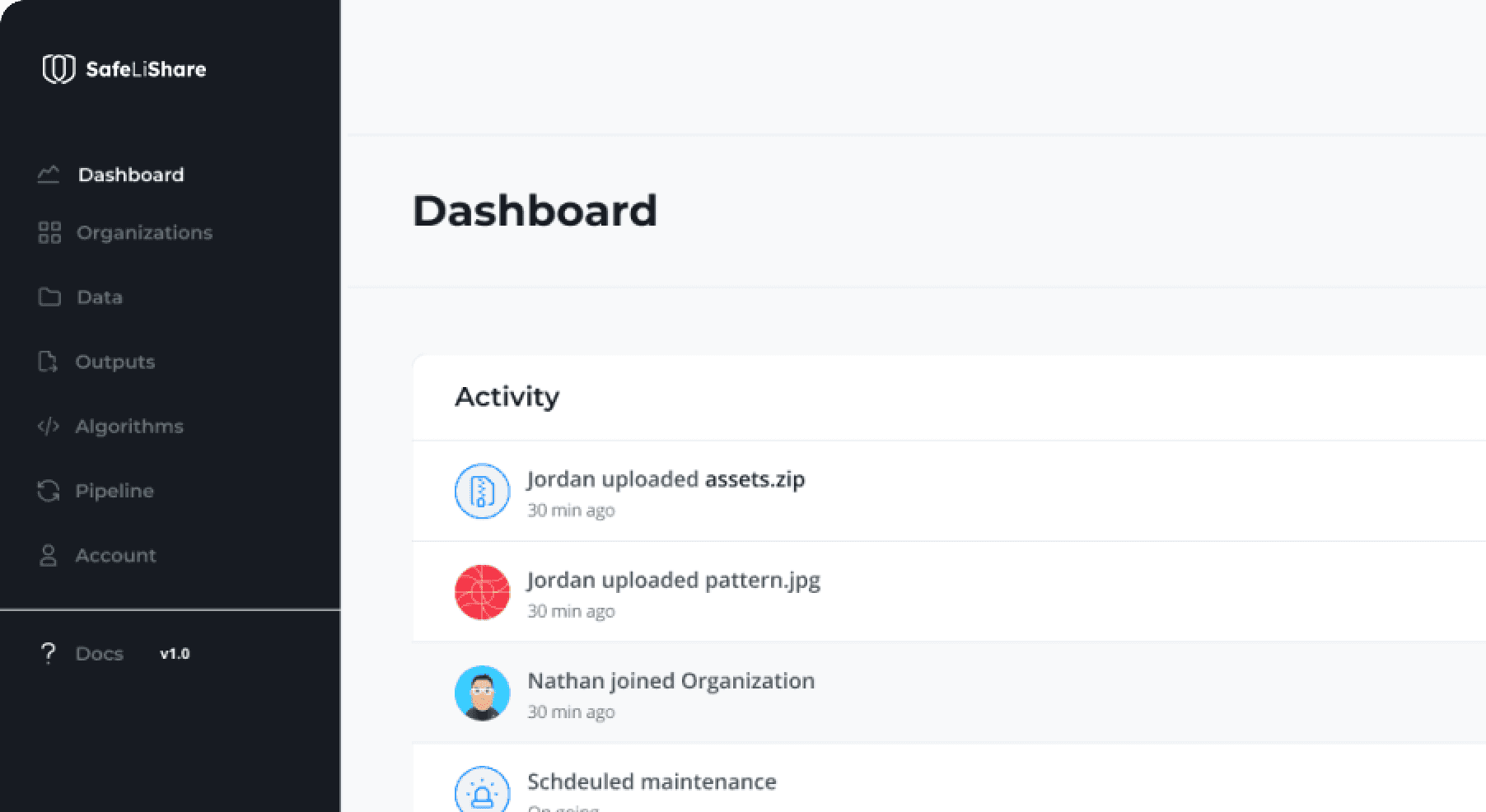

Experience worry-free data processing and AI modeling with SafeLiShare’s cutting-edge encryption platform. Our policy-controlled environment supports responsible AI operation by protecting sensitive information, ensuring transparency, and helping prevent AI bias.

Protecting Sensitive Data

One of the primary concerns with AI is that it requires large amounts of data to function effectively. This data can include sensitive information such as personal details, financial records, and health information.

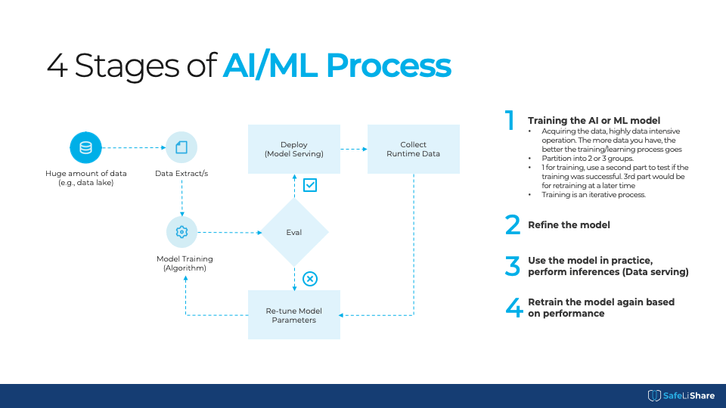

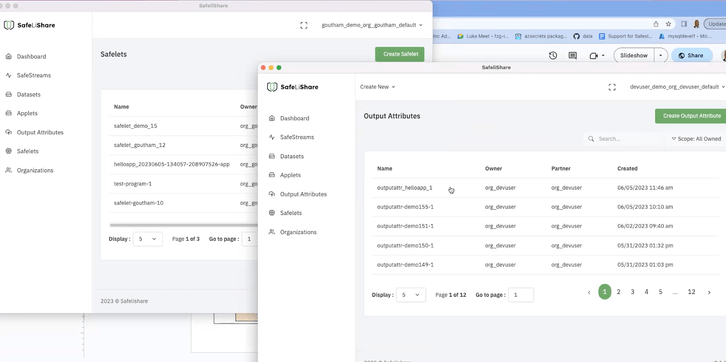

SafeLiShare locates the data needed for processing in a data lake or data repository, extracts it, and can optionally de-identify it. The extracted data is then immediately encrypted, along with your AI model, before any data processing (for example, model training) begins.
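The de-identification step mentioned above can take many forms. As a minimal sketch, assuming that de-identification means pseudonymizing direct identifiers with a keyed hash (HMAC-SHA256), it might look like the Python below. The field list and the helper names `DIRECT_IDENTIFIERS`, `pseudonymize`, and `deidentify` are hypothetical illustrations, not part of SafeLiShare's product.

```python
import hashlib
import hmac
import secrets

# Hypothetical set of direct identifiers; a real pipeline would take these
# from a data catalog or governance policy.
DIRECT_IDENTIFIERS = {"name", "email", "ssn"}


def pseudonymize(value: str, key: bytes) -> str:
    """Replace an identifier with a keyed HMAC-SHA256 token (64 hex chars).

    The same value and key always produce the same token, so records can
    still be joined, but the value cannot feasibly be recovered without the key.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


def deidentify(record: dict, key: bytes) -> dict:
    """Return a copy of the record with direct identifiers pseudonymized."""
    return {
        field: pseudonymize(str(value), key) if field in DIRECT_IDENTIFIERS else value
        for field, value in record.items()
    }


if __name__ == "__main__":
    # Assumption: the key stays with the data owner and is never shared
    # with the model owner.
    key = secrets.token_bytes(32)
    record = {"name": "Alice Smith", "email": "alice@example.com", "age": 42}
    print(deidentify(record, key))
```

Keyed hashing keeps records joinable without exposing raw identifiers, but it is pseudonymization rather than full anonymization: quasi-identifiers such as `age` remain and may still need generalization or suppression.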

The encrypted assets are brought together in a secure enclave, where the data processing occurs with guaranteed security.

Once the trained model has been evaluated, it can be deployed for serving in another secure enclave, again safeguarding your data and model while they process large amounts of sensitive consumer data. The outputs of the deployed model are encrypted and can only be decrypted by a predetermined consumer, which further ensures compliance control over the data, the model, and the results.

Ensuring Transparency

Each secure enclave used throughout the data sharing process produces a tamper-proof audit log of the actions performed inside it. Because the log entries are cryptographically signed, they provide a provable account of every action in the data sharing process.

Audit logs are important in data processing because they provide a record of all activities performed on the data. They can help to identify who accessed the data, what changes were made, and when they were made. This information is crucial for maintaining data integrity and security, as it allows for full transparency so that any malicious activity can be detected immediately.
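To make the idea of a tamper-evident, signed audit log more concrete, here is a minimal Python sketch of a hash-chained log that records who did what, to which asset, and when. It is an illustration under stated assumptions, not SafeLiShare's implementation: the `AuditLog` class and its fields are hypothetical, and HMAC-SHA256 with a shared secret stands in for the enclave's signing key. A production system would more likely use asymmetric signatures so that auditors can verify entries without holding the secret.

```python
import hashlib
import hmac
import json
import time
from dataclasses import dataclass, field

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry
BODY_FIELDS = ("actor", "action", "resource", "timestamp", "prev_hash")


@dataclass
class AuditLog:
    """Append-only log: each entry is chained to the previous one and MAC'd.

    Assumption: `signing_key` stands in for a key held only inside the enclave.
    """
    signing_key: bytes
    entries: list = field(default_factory=list)

    def _payload(self, body: dict) -> bytes:
        # Canonical JSON so the same body always serializes identically.
        return json.dumps(body, sort_keys=True).encode("utf-8")

    def append(self, actor: str, action: str, resource: str) -> dict:
        prev = self.entries[-1]["entry_hash"] if self.entries else GENESIS
        body = {
            "actor": actor,            # who accessed the data
            "action": action,          # what was done
            "resource": resource,      # which asset was touched
            "timestamp": time.time(),  # when it happened
            "prev_hash": prev,         # link to the previous entry
        }
        payload = self._payload(body)
        entry = dict(body)
        entry["entry_hash"] = hashlib.sha256(payload).hexdigest()
        entry["mac"] = hmac.new(self.signing_key, payload, hashlib.sha256).hexdigest()
        self.entries.append(entry)
        return entry

    def verify(self) -> bool:
        """Recompute every link, hash, and MAC; return False on any mismatch."""
        prev = GENESIS
        for entry in self.entries:
            body = {k: entry[k] for k in BODY_FIELDS}
            if body["prev_hash"] != prev:
                return False
            payload = self._payload(body)
            if hashlib.sha256(payload).hexdigest() != entry["entry_hash"]:
                return False
            expected = hmac.new(self.signing_key, payload, hashlib.sha256).hexdigest()
            if not hmac.compare_digest(expected, entry["mac"]):
                return False
            prev = entry["entry_hash"]
        return True


if __name__ == "__main__":
    log = AuditLog(signing_key=b"hypothetical-enclave-key")
    log.append("analyst@bank", "train_model", "credit_dataset_v3")
    log.append("model-service", "inference", "credit_model_v1")
    print(log.verify())  # True
    log.entries[0]["actor"] = "someone-else"  # tamper with history
    print(log.verify())  # False
```

Because each entry embeds the hash of the one before it, editing, reordering, or deleting an earlier entry causes `verify()` to fail. Detecting truncation of the most recent entries would additionally require publishing or anchoring the latest `entry_hash` outside the enclave.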

Preventing AI Bias

When AI systems are trained on biased data or designed with inherent biases, they can perpetuate and even amplify existing biases in society. This can result in discrimination against certain groups of people in areas such as hiring, lending, and criminal justice. AI bias can also undermine the reliability and trustworthiness of AI systems, with significant consequences in fields such as healthcare and autonomous vehicles. One way to prevent AI bias is to retrain AI models regularly. Ongoing retraining lets teams incorporate new, more representative data and apply the latest bias-mitigation techniques as they emerge.
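As one illustration of how a team might decide when retraining is due, the following Python sketch computes the demographic parity gap (the largest difference in positive-prediction rate between groups) and flags the model when that gap exceeds a threshold. Demographic parity is only one of several fairness metrics, the `max_gap=0.1` threshold is an arbitrary assumption, and the function names are hypothetical. In the workflow described here, both the check and any retraining would run inside a secure enclave.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive predictions (label 1) for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += pred
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_gap(predictions, groups):
    """Largest difference in selection rate between any two groups."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def needs_retraining(predictions, groups, max_gap=0.1):
    """Flag the model for retraining when the gap exceeds a hypothetical threshold."""
    return demographic_parity_gap(predictions, groups) > max_gap


if __name__ == "__main__":
    preds = [1, 1, 1, 0, 1, 0, 0, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(selection_rates(preds, groups))         # {'A': 0.75, 'B': 0.25}
    print(demographic_parity_gap(preds, groups))  # 0.5
    print(needs_retraining(preds, groups))        # True
```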

While many enterprises are wary of exposing sensitive data to repeated retraining, SafeLiShare makes it easy to re-tune your model parameters and retrain your model within secure enclaves. You can retrain on encrypted data, keeping your data and AI model safe while advancing AI performance.

SafeLiShare recognizes the importance of AI governance in keeping data sharing safe and secure. By implementing proper AI governance practices, such as security, monitoring, and bias prevention, SafeLiShare helps businesses and organizations protect their data and their clients' privacy. This approach not only benefits the organizations themselves but also promotes trust and confidence in AI technology.

If you are interested in booking a demo or educational session, please contact info@safelishare.com or visit the Confidential AI solution page for more information.

Suggested for you

February 21, 2024

RSAC 2024: What’s New

SafeLiShare unveils groundbreaking AI-powered solutions: the AI Sandbox and Privacy Guard in RSAC 2024

February 21, 2024

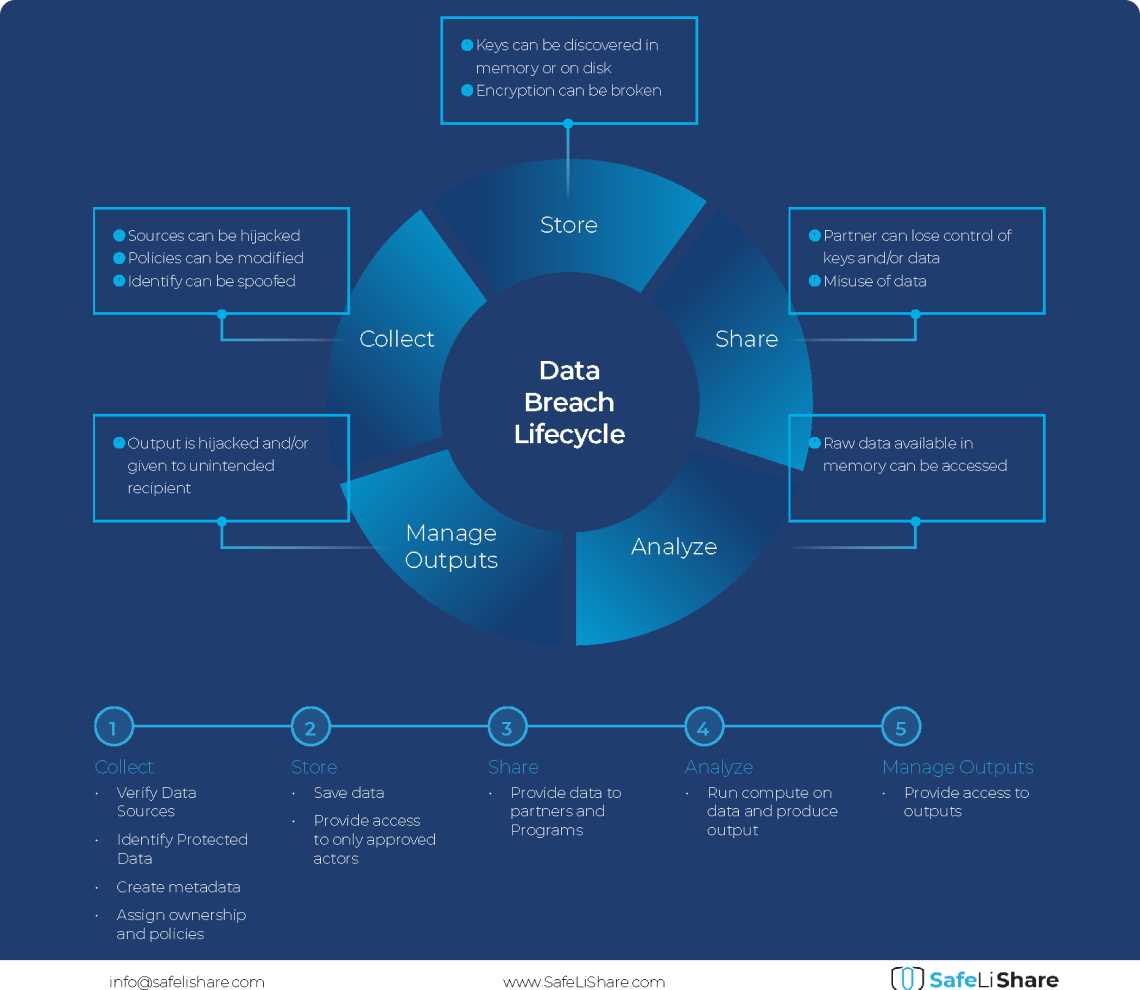

Cloud Data Breach Lifecycle Explained

During the data life cycle, sensitive information may be exposed to vulnerabilities in transfer, storage, and processing activities.

February 21, 2024

Bring Compute to Data

Predicting cloud data egress costs can be a daunting task, often leading to unexpected expenses post-collaboration and inference.

February 21, 2024

Zero Trust and LLM: Better Together

Cloud analytics inference and Zero Trust security principles are synergistic components that significantly enhance data-driven decision-making and cybersecurity resilience.